Openshift Troubleshooting and Operations

This article is part of a bigger document I wrote for myself, with notes about integrating Cisco ACI, Openshift, HP Synergy and 3PAR. It contains the part I found most challenging, since I learned it from scratch, in a rather tight time frame and under some pressure.

So I felt the need to document it as a sort of braindump, to avoid reinventing the wheel in the future once I start forgetting.

Openshift Operations

Delete ACI or other pod/container / trigger reinit / restart

oc delete pod aci-containers-host-xxxx -n aci-containers-system

oc delete pod/<pod name>

oc -n <namespace> delete pod/<pod name>

If the POD is managed by a controller that always keeps a minimum number of replicas running (for example a Deployment with its ReplicaSet, or the DaemonSet behind aci-containers-host), then DELETING IT RESTARTS IT.

Upon restart, if it is the aci-containers-controller, it will connect to the APIC (visible in its logs from the Openshift Master: oc logs <pod name> -n aci-containers-system).

Is Fabric discovery for the Openshift integration of ACI working?

Look in the Synergy Tenant under the EPGs kube-nodes, kube-default and kube-system, and check that endpoints show up under OPERATIONAL. Also check the Infra Tenant. Since your new Openshift Nodes run the Opflex container inside the aci-containers-host POD, they connect to the Fabric and should be visible there. Opflex emulates a virtual Leaf, handles the connection to the Fabric switches and pushes policies to the OVS container; OVS builds the VXLAN tunnels.

Log in to Openshift to be able to run “oc” commands

oc login -u system:admin -n default

cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

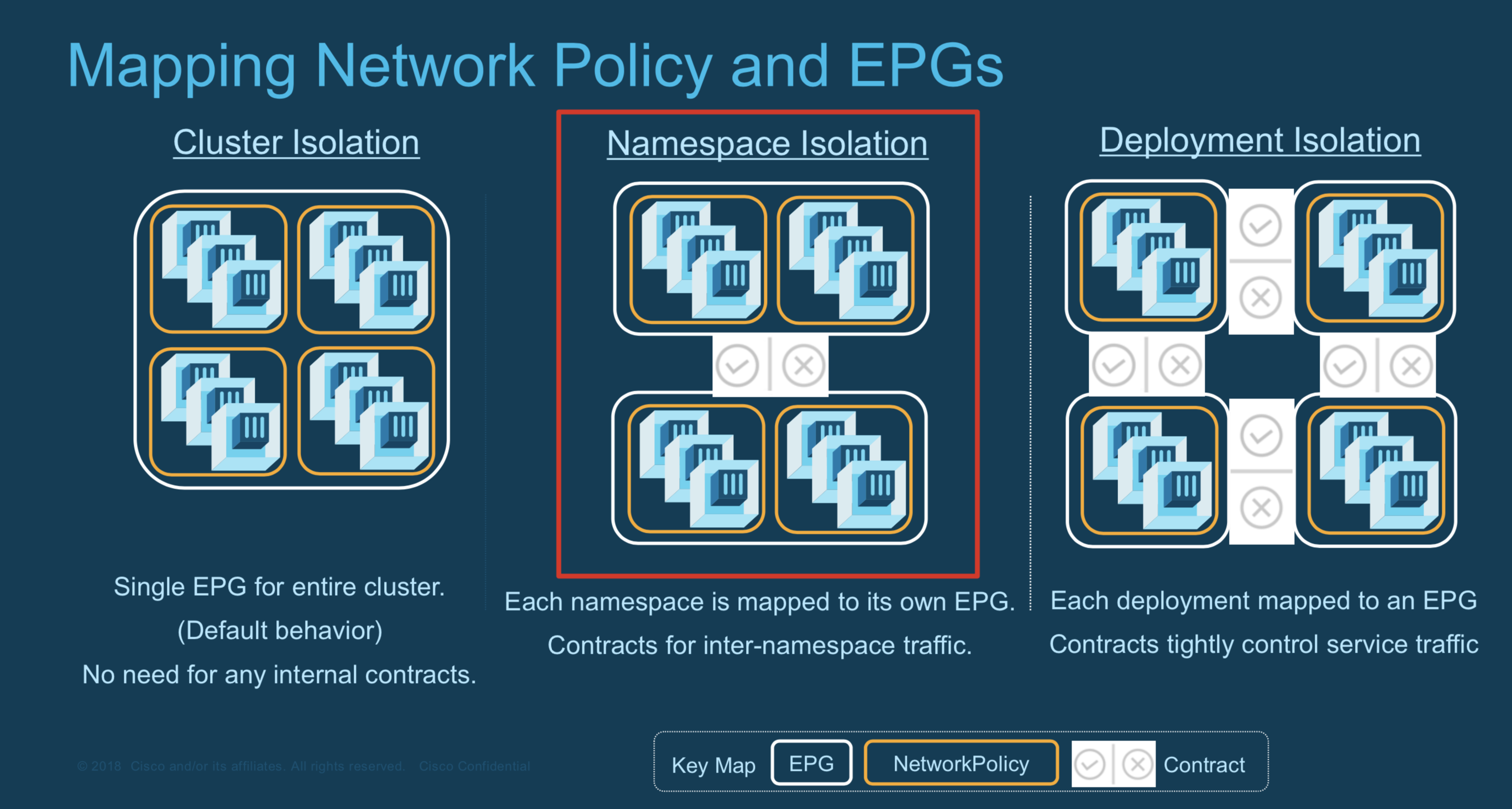

Put Openshift element (deployment/namespace/cluster) into EPG in ACI

Openshift elements can be placed into an EPG at one of the levels below:

- Namespace

- Deployment

- Application

Steps required for all cases

- Create the EPG and add it to the bridge domain kube-pod-bd.

- Attach the EPG to the VMM domain.

- Configure the EPG to consume and provide contracts in the OpenShift tenant:

  - CONSUME: arp, dns, kube-api (optional)

  - PROVIDE: arp, health-check

  ALTERNATIVE (fewer changes): Contract Inheritance -> the new EPG inherits its Contracts from the “kube-default” EPG. https://www.cisco.com/c/en/us/td/docs/switches/datacenter/aci/apic/sw/1-x/aci-fundamentals/b_ACI-Fundamentals/b_ACI-Fundamentals_chapter_010001.html#id_51661

- Configure additional Contracts -> Internet ACCESS

  - CONSUME: the L3out contract (the one listed as PROVIDED under the L3out)

Namespace

With OC

oc annotate namespace demo opflex.cisco.com/endpoint-group='{"tenant":"synergy_01","app-profile":"kubernetes","name":"EPG_Synergy_1"}'

namespace/demo annotated

Restore (back to the default kube-default EPG):

oc annotate namespace demo opflex.cisco.com/endpoint-group='{"tenant":"synergy_01","app-profile":"kubernetes","name":"kube-default"}' --overwrite
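The JSON value of the annotation is easy to mistype by hand (quotes, braces). A minimal sketch, assuming bash and the `opflex.cisco.com/endpoint-group` key used above; `epg_annotation` is a hypothetical helper name, not part of `oc` or `acikubectl`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the opflex endpoint-group JSON for an ACI tenant,
# application profile and EPG name, in the format the oc annotate commands above expect.
epg_annotation() {
  local tenant="$1" app_profile="$2" epg="$3"
  printf '{"tenant":"%s","app-profile":"%s","name":"%s"}' "$tenant" "$app_profile" "$epg"
}

# Usage (needs a logged-in oc session):
#   oc annotate namespace demo \
#     opflex.cisco.com/endpoint-group="$(epg_annotation synergy_01 kubernetes EPG_Synergy_1)" --overwrite
```

Building the string in one place keeps the tenant/app-profile/EPG order identical for the assign and restore commands.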

With acikubectl

acikubectl set default-eg namespace <namespace> -t <tenant> -a <app-profile> -g <EPG>

Via yaml file

Through the .yaml file (the annotation value must be a string, hence the single quotes):

metadata:
  annotations:
    opflex.cisco.com/endpoint-group: '{"tenant":"<tenant>","app-profile":"<app-profile>","name":"<EPG>"}'

Deployment

With OC

oc --namespace=<namespace> annotate deployment <deployment name> opflex.cisco.com/endpoint-group='{"tenant":"<tenant>","app-profile":"<app-profile>","name":"<EPG>"}'

With acikubectl

acikubectl set default-eg deployment <deployment> -n <namespace> -t <tenant> -a <app-profile> -g <EPG>

OR, for a single POD only (the annotation is lost when the POD gets recreated):

oc annotate pods mihai-test-1-8wcj9 opflex.cisco.com/endpoint-group='{"tenant":"synergy_01","app-profile":"kubernetes","name":"EPG_Synergy_1"}'

pod/mihai-test-1-8wcj9 annotated

Cluster

You can edit the deployment config and set the annotations globally there. List the deployment configs first:

[root@master1 ~]# oc get dc
NAME                     REVISION   DESIRED   CURRENT   TRIGGERED BY
mongodb                  1          1         1         config,image(mongodb:3.2)
node1                    1          2         2         config,image(node1:latest)
postgresql               1          1         1         config,image(postgresql:9.5)
rails-pgsql-persistent   1          1         0         config,image(rails-pgsql-persistent:latest)

oc edit dc/<name>

Remove annotations from Node

oc edit node <node name>

Verify annotations

oc describe node <node name> | grep cisco

oc get pods -n <namespace> -o wide | grep <pod name>
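Instead of opening an editor, an annotation can also be dropped with `oc annotate` and a trailing `-` after the key. A small sketch of that plus the verification step above; `remove_annotation` and `show_cisco_annotations` are hypothetical helper names, and the annotation key is whatever you find in the `oc describe` output:

```shell
#!/usr/bin/env bash
# Hypothetical helpers around the commands above.
# "oc annotate <kind> <name> <key>-" (trailing dash) removes an annotation non-interactively.
remove_annotation() {
  local kind="$1" name="$2" key="$3"
  oc annotate "$kind" "$name" "${key}-"
}

# Print the Cisco/opflex lines from "oc describe", or a note if there are none.
show_cisco_annotations() {
  local kind="$1" name="$2"
  oc describe "$kind" "$name" | grep -i 'cisco' || echo "no cisco annotations on $kind/$name"
}

# Usage:
#   show_cisco_annotations node node1.corp
#   remove_annotation node node1.corp <annotation key>
```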

Schedule workloads (pods/containers) OFF a Node (maintenance)

oc adm manage-node node2.corp --schedulable=false

Schedule back to Node

oc adm manage-node node2.corp --schedulable

Evacuate Node workloads (after schedulable was set to false / maintenance)

oc adm drain <node1> <node2> --force=true

Restart ACI containers manually

oc delete pod aci-containers-host-xxxx -n aci-containers-system

If the POD is managed by a controller that always keeps a minimum number of replicas running, then DELETING IT RESTARTS IT. Upon restart, if it is the aci-containers-controller, it will connect to the APIC (visible in its logs from the Openshift Master: oc logs <pod name> -n aci-containers-system).

In the case above, aci-containers-host is one of the three containers running inside the same POD (alongside mcast-daemon and opflex); together they provide the CNI/network connectivity for the Openshift application PODs.

Make test Openshift app and expose to the outside (VIP on ACI, not OCRouter)

# make mysql app
oc new-app \
  -e MYSQL_USER=admin \
  -e MYSQL_PASSWORD=redhat \
  -e MYSQL_DATABASE=mysqldb \
  registry.access.redhat.com/openshift3/mysql-55-rhel7

# add -o yaml if you want to see what gets generated (the objects are printed instead of created)

# assign VIP
oc expose dc mysql-55-rhel7 --type=LoadBalancer --name=mariadb-ingress

Make test Openshift app and show yaml file that can be used to do a similar app

Run the oc new-app command from above with -o yaml added.

Add Cluster Admin role to the GUI admin user

The GUI admin user is not a cluster admin by default.

oc login -u system:admin -n default

system:admin is the main cluster admin. CLI login works with it, but it does not have an API token (for that we need a normal user). You can check this with “oc whoami -t”.

oc adm groups new admin admin

oc policy add-role-to-user admin admin -n default

oc policy add-role-to-user admin admin -n management-infra

oc policy add-role-to-user admin admin -n openshift-monitoring

oc policy add-role-to-user admin admin -n openshift-infra

Where does OCrouter (haproxy) keep its settings

To check the apps that have been provisioned inside haproxy (which domain name goes to which PODs = backends, or to specific URLs; this also includes the Openshift console GUI):

Go to the router pod (router-1-xxxxx in the default project, see oc get pods -n default)

ssh root@master1

oc -n default rsh <router pod name>

ps ax | grep haproxy

cat /var/lib/haproxy/conf/haproxy.config
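The config file is long; to see only which backends point to which PODs, a small awk sketch can print each `backend` section with its `server` lines. It assumes the standard haproxy `backend`/`server` keywords; `list_haproxy_backends` is a hypothetical helper name, and the exact backend naming used by the Openshift router should be checked in your own file:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print each haproxy backend followed by its server name and address.
# Default path is the router's config file mentioned above.
list_haproxy_backends() {
  local cfg="${1:-/var/lib/haproxy/conf/haproxy.config}"
  awk '
    # Sections that are not backends reset the current backend name.
    $1 ~ /^(global|defaults|frontend|listen)$/ { be = ""; next }
    $1 == "backend" { be = $2; print "backend " be; next }
    # haproxy syntax: server <name> <address>[:port] [options]
    $1 == "server" && be != "" { print "  server " $2 " " $3 }
  ' "$cfg"
}

# Usage from the master, without an interactive shell in the router POD:
#   oc -n default exec <router pod name> -- cat /var/lib/haproxy/conf/haproxy.config > haproxy.config
#   list_haproxy_backends haproxy.config
```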

Connect inside a container

With OpenShift

oc get pods --all-namespaces -o wide | grep <your POD of interest>

oc -n <namespace> rsh -c <container name> <pod name>

(-c is only needed when the POD has more than one container inside, for example aci-containers-host, which has mcast-daemon, aci-containers-host and opflex)

With Docker

ssh root@node1

docker ps | grep <name of the container/pod>

docker exec -it <that ID on the left from the former command> sh (or bash, depending what is available inside the container)

Run commands from Host OS inside the container Network Namespace (e.g.: tcpdump)

oc get pods --all-namespaces -o wide | grep <container>

# see on which Node it runs (NODE column)

ssh root@node1

docker ps | grep <name>

docker inspect <the ID on the left of the container name> | grep Pid

nsenter -t <the PID> -n tcpdump -ne -i eth0   # or any other Host OS command
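The PID lookup and the `nsenter` call can be combined. A minimal sketch, assuming the Docker runtime used on these Nodes; `docker inspect --format '{{.State.Pid}}'` prints only the PID, so the `grep Pid` step is not needed. `netns_exec` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run a host command inside the network namespace of a
# container, given its docker container ID (from "docker ps").
netns_exec() {
  local cid="$1"; shift
  local pid
  pid="$(docker inspect --format '{{.State.Pid}}' "$cid")" || return 1
  # A stopped container reports PID 0: there is no namespace to enter.
  if [ -z "$pid" ] || [ "$pid" = "0" ]; then
    echo "container $cid is not running" >&2
    return 1
  fi
  nsenter -t "$pid" -n "$@"
}

# Usage on the Node (as root):
#   netns_exec <container id> tcpdump -ne -i eth0
#   netns_exec <container id> ip addr
```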

Edit Openshift Deployment Config (dc) parameters

oc get dc

[root@master1 ~]# oc get dc
NAME                     REVISION   DESIRED   CURRENT   TRIGGERED BY
mongodb                  1          1         1         config,image(mongodb:3.2)
node1                    1          2         2         config,image(node1:latest)
postgresql               1          1         1         config,image(postgresql:9.5)
rails-pgsql-persistent   1          1         0         config,image(rails-pgsql-persistent:latest)

# example

oc edit dc/registry-console

Edit Openshift Replica Set

A ReplicaSet (or, for DeploymentConfigs, the ReplicationController component of Openshift) ensures you are running the number of copies of a POD specified in your spec.

oc get rs

oc edit rs/cluster-monitoring-operator-79d6c544f

Start a simple busybox POD/container in Openshift for tests

If you want to do some simple tests, e.g. check connectivity:

oc -n default run -i --tty --rm debug --image=busybox --restart=Never -- sh

List all block devices of all Openshift Nodes - see also what it sees as raw from 3PAR

The Ansible inventory file (not shown here) contains all the server hostnames; it is the inventory used to deploy Openshift, which is also present in the bigger document. The ansible command says: “use this file and run on everything defined under the [nodes] section.”

ansible -i install-inventory-file-of-openshift nodes -m shell -a "lsblk"

node2.corp | SUCCESS | rc=0 >>
NAME          MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda             8:0    0 558.9G  0 disk
├─sda1          8:1    0   200M  0 part /boot/efi
├─sda2          8:2    0     1G  0 part /boot
└─sda3          8:3    0 557.7G  0 part
  ├─rhel-root 253:0    0    50G  0 lvm  /
  ├─rhel-swap 253:1    0     4G  0 lvm  [SWAP]
  └─rhel-home 253:2    0 503.7G  0 lvm  /home
sdc             8:32   0 894.3G  0 disk
sdd             8:48   0 894.3G  0 disk
sde             8:64   0 894.3G  0 disk

infra1.corp | SUCCESS | rc=0 >>
NAME          MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda             8:0    0   120G  0 disk
├─sda1          8:1    0     1G  0 part /boot
└─sda2          8:2    0   119G  0 part
  ├─rhel-root 253:0    0    50G  0 lvm  /
  ├─rhel-swap 253:1    0   7.9G  0 lvm  [SWAP]
  └─rhel-home 253:2    0  61.1G  0 lvm  /home
sdb             8:16   0   120G  0 disk
sr0            11:0    1   4.2G  0 rom

master1.corp | SUCCESS | rc=0 >>
NAME          MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT
sda             8:0    0   120G  0 disk
├─sda1          8:1    0     1G  0 part /boot
└─sda2          8:2    0   119G  0 part
  ├─rhel-root 253:0    0    50G  0 lvm  /
  ├─rhel-swap 253:1    0   7.9G  0 lvm  [SWAP]
  └─rhel-home 253:2    0  61.1G  0 lvm  /home
sdb             8:16   0   120G  0 disk
sr0            11:0    1   4.2G  0 rom

...

sdc             8:32   0 894.3G  0 disk   <-- **volumes from 3PAR, unlike sda = local disk**
sdd             8:48   0 894.3G  0 disk
sde             8:64   0 894.3G  0 disk

List volumes and their claims

You can create a volume (PV), for example by describing what you want in a yaml file and requesting one of 1G. A claim (PVC) means a pod/container wants to use the created volume.

oc get pv

oc get pvc

oc describe pv/<name>

Delete PVC

oc get pvc

oc delete pvc/<pvc id>

Delete volume

Make sure there are no claims to it first (delete the PVC before):

oc delete pv/sc1-094eea61-9c99-11e9-85b5-566f4fb90000
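The "delete the PVC first" rule can be enforced with a check on the PV phase. A minimal sketch, assuming a logged-in `oc`; a PV whose `.status.phase` is `Bound` still has a claim, while `Released`/`Available`/`Failed` ones can be deleted. `safe_delete_pv` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: delete a PV only if no PVC is bound to it.
safe_delete_pv() {
  local pv="$1" phase claim
  phase="$(oc get pv "$pv" -o jsonpath='{.status.phase}')" || return 1
  if [ "$phase" = "Bound" ]; then
    # .spec.claimRef tells which PVC (namespace/name) holds the volume.
    claim="$(oc get pv "$pv" -o jsonpath='{.spec.claimRef.namespace}/{.spec.claimRef.name}')"
    echo "PV $pv is still Bound to $claim; delete that PVC first" >&2
    return 1
  fi
  oc delete pv "$pv"
}

# Usage:
#   safe_delete_pv sc1-094eea61-9c99-11e9-85b5-566f4fb90000
```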

List Projects / Switch to your OC Project (namespace)

oc get projects

oc project <the name>

# after this, future commands no longer need to specify "-n namespace_name"

[root@master1 ~]# oc projects
You have access to the following projects and can switch between them with 'oc project <projectname>':

    aci-containers-system
  * default
    demo
    kube-proxy-and-dns
    kube-public
    kube-service-catalog
    kube-system
    management-infra
    openshift
    openshift-ansible-service-broker
    openshift-console
    openshift-infra
    openshift-logging
    openshift-monitoring
    openshift-node
    openshift-template-service-broker
    openshift-web-console
    operator-lifecycle-manager

Using project "default" on server "https://lb.blabla.corp:8443".

OC force delete PODs

https://access.redhat.com/solutions/2317401

Try adding --grace-period=0 to oc delete:

# oc delete pod example-pod-1 -n name --grace-period=0

Or try adding --force along with --grace-period:

# oc delete pod example-pod-1 -n name --grace-period=0 --force
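When several PODs hang at once (as in the storage case below), a small loop saves typing. A sketch, assuming the pre-4.x `oc get pods --all-namespaces` column layout (STATUS is the 4th column); the helper names are hypothetical. Note that a forced delete only removes the object from the API without waiting for the Node, so an underlying problem such as a stuck volume mount is still there afterwards:

```shell
#!/usr/bin/env bash
# Hypothetical helpers: list PODs stuck in Terminating and force-delete them.
# Columns of "oc get pods --all-namespaces --no-headers":
#   NAMESPACE NAME READY STATUS RESTARTS AGE
list_terminating_pods() {
  oc get pods --all-namespaces --no-headers | awk '$4 == "Terminating" { print $1, $2 }'
}

force_delete_terminating_pods() {
  list_terminating_pods | while read -r ns pod; do
    echo "force deleting $ns/$pod"
    # </dev/null keeps oc from reading the loop's input
    oc delete pod "$pod" -n "$ns" --grace-period=0 --force </dev/null
  done
}
```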

OC force delete a project / POD HUNG in Terminating

Sometimes the 3PAR storage/volume plugin had issues because multipath -ll no longer showed the 3PAR as reachable via FC.

We suspect this was a server profile re-apply problem in the Synergy chassis, but it did not come back after having bugged us for a few days.

When this happened, any delete operation that involved the volume somewhere (example: deleting a POD that has a volume mount) froze forever in Terminating state (as seen with “oc -n

Solution 1

Grant cluster admin role to admin.

We already had this from the last stage after the installation:

# new group admin with user admin as member, and the admin role in these (default Openshift) projects

oc adm groups new admin admin

oc policy add-role-to-user admin admin -n default

oc policy add-role-to-user admin admin -n management-infra

oc policy add-role-to-user admin admin -n openshift-monitoring

oc policy add-role-to-user admin admin -n openshift-infra

Add cluster admin role to user admin:

oc adm policy add-cluster-role-to-user cluster-admin admin

# (also done for admin; I think in the end it worked better with system:admin, as it already has more rights)

The system:admin user does not have an API token, and the script linked below requires one when passing parameters to force a delete (in the end it edits the Service Instance responsible for your deployment and sets its state to Finalized):

https://github.com/jefferyb/useful-scripts/blob/master/openshift/force-delete-openshift-project

Get the token for use with the script:

oc login -u admin

oc whoami -t

Remember to log back in as system:admin at the end, otherwise some things will not work.

Workaround to Solution 1

If the rights via the API are still not enough, try giving the admin user sudo rights in the API, then change the script so that its last API request is made as a sudo (impersonated) request.

$ oc adm policy add-cluster-role-to-user sudoer <username> --as system:admin

If you are using the REST API directly and need to perform an action as system:admin, you need to supply an additional header with the HTTP request against the REST API endpoint. The name of the header is Impersonate-User.

Take the curl line and add the Impersonate-User part to the github script.

#!/bin/sh
# Command substitution is needed so the variables hold the command output.
SERVER=$(oc whoami --show-server)
TOKEN=$(oc whoami --show-token)
USER="system:admin"
URL="$SERVER/api/v1/nodes"
curl -k -H "Authorization: Bearer $TOKEN" -H "Impersonate-User: $USER" "$URL"

Solution 2

Edit the Service Instance directly.

Check whether a service instance exists and remove its Finalizer entries there:

# in the metadata section you will find a finalizers section: REMOVE it

oc get serviceinstance --all-namespaces

oc edit serviceinstance/<name>
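The same change can be made without an editor. A sketch, assuming a JSON merge patch is accepted for ServiceInstance objects (setting a field to `null` in a merge patch removes it); `clear_finalizers` is a hypothetical helper name. Removing finalizers skips whatever cleanup they were waiting for, so use it only on objects that are really stuck:

```shell
#!/usr/bin/env bash
# Hypothetical helper: clear metadata.finalizers on a stuck ServiceInstance.
clear_finalizers() {
  local ns="$1" name="$2"
  oc -n "$ns" patch serviceinstance "$name" --type=merge \
    -p '{"metadata":{"finalizers":null}}'
}

# Usage:
#   oc get serviceinstance --all-namespaces
#   clear_finalizers <namespace> <serviceinstance name>
```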

Analyze etcd contents, looking for inconsistencies behind undeletable objects

This is the Openshift etcd and not the one HP also deploys for its own storage of dory state.

source /etc/etcd/etcd.conf

etcdctl3 --cert=$ETCD_PEER_CERT_FILE --key=$ETCD_PEER_KEY_FILE --cacert=$ETCD_TRUSTED_CA_FILE \
  --endpoints=$ETCD_LISTEN_CLIENT_URLS get / --prefix --keys-only > /var/tmp/datastore.data
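To make the key dump easier to read, two awk sketches: one counts keys per resource type, the other lists the keys of one namespace (useful for spotting leftovers of an undeletable project). They assume keys of the form `/kubernetes.io/<resource>/<namespace>/<name>` (or `/openshift.io/...`) and skip blank lines in the dump; some resources use an extra path level and are not matched by the namespace filter. Both helper names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helpers for the key dump produced above.

# Count keys per "<prefix>/<resource>", most frequent first.
count_keys_by_resource() {
  awk -F/ 'NF > 2 { print $2 "/" $3 }' "${1:-/var/tmp/datastore.data}" | sort | uniq -c | sort -rn
}

# Print every key whose 4th path element equals the namespace.
keys_in_namespace() {
  local ns="$1" file="${2:-/var/tmp/datastore.data}"
  awk -F/ -v ns="$ns" 'NF > 3 && $4 == ns' "$file"
}

# Usage:
#   count_keys_by_resource
#   keys_in_namespace demo
```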

And take a backup using Ansible, for example with these playbooks:

https://github.com/openlab-red/openshift-management

https://github.com/openlab-red/openshift-management/blob/master/playbooks/openshift-backup/README.md

Login to Container as ROOT

## login as root inside

docker exec -u 0 -it <id> bash

Add Roles to User in Openshift

system:admin does not have a token output by “oc whoami -t”

oc login -u system:admin -n default

oc adm groups new admin admin

oc policy add-role-to-user admin admin -n default

oc policy add-role-to-user admin admin -n management-infra

oc policy add-role-to-user admin admin -n openshift-monitoring

oc policy add-role-to-user admin admin -n openshift-infra

Openshift Console not available

Openshift Logs

master-logs api api

master-logs controllers controllers

/usr/local/bin/master-logs etcd etcd

See logs from Linux - journalctl

journalctl -xe

# follow all logs in real time from all systemd units (including atomic-openshift-node, which starts the other things Openshift needs on a Node)

journalctl -f

#specific unit

journalctl -u <name of unit> -f

# or add -n 100 to see only the last 100 lines

See failed systemctl units (including the Openshift ones)

systemctl list-units --state=failed

systemctl status <name of service that you saw failed>
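The two steps above can be chained so every failed unit is shown with its recent log lines. A sketch, assuming systemd's `list-units --no-legend` output (some versions print a leading `●` marker, so the unit name is taken as the first field with a unit suffix); helper names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the names of failed systemd units.
failed_units() {
  systemctl list-units --state=failed --no-legend |
    awk '{ for (i = 1; i <= NF; i++)
             if ($i ~ /\.(service|socket|mount|timer|target|path|scope|slice|swap|device|automount)$/) { print $i; break } }'
}

# Print each failed unit followed by its last N journal lines (default 50).
show_failed_unit_logs() {
  local lines="${1:-50}" unit
  for unit in $(failed_units); do
    echo "=== $unit ==="
    journalctl -u "$unit" -n "$lines" --no-pager
  done
}

# Usage:
#   show_failed_unit_logs 100
```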

POD logs / Container logs

oc logs pod/<pod name>

If your POD never came up and is in an odd state, it may not have been fully initialized, so oc logs shows nothing; in that case go to the Node via ssh and use docker logs.

docker logs <container id from docker ps>

Nodes not seeing 3PAR via FC (multipath -ll not showing output)

chkconfig lldpad on

service lldpad start

service fcoe restart

multipath -d

multipath -l

lsscsi
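To quickly see whether a Node sees any 3PAR LUNs at all, the `lsscsi` output can be filtered by vendor. A sketch, assuming the 3PAR volumes report the vendor string `3PARdata` (verify against your own `lsscsi` output) and that the device node is the last column; `threepar_devices` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "lsscsi" output on stdin and print the device
# nodes of disks whose vendor column is 3PARdata.
# lsscsi columns: [H:C:T:L] type vendor model rev device
threepar_devices() {
  awk '$2 == "disk" && $3 == "3PARdata" { print $NF }'
}

# Usage on a Node:
#   lsscsi | threepar_devices
```

An empty result, together with an empty `multipath -ll`, points to the FC path rather than to Openshift.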

OC display all Nodes

Displays the Openshift Nodes (physical, or in our case virtual) where you can run containers (PODs)

oc get nodes

[root@master1 ~]# oc get nodes
NAME           STATUS    ROLES     AGE       VERSION
infra1.corp    Ready     infra     10d       v1.11.0+d4cacc0
infra2.corp    Ready     infra     10d       v1.11.0+d4cacc0
master1.corp   Ready     master    10d       v1.11.0+d4cacc0
master2.corp   Ready     master    10d       v1.11.0+d4cacc0
master3.corp   Ready     master    10d       v1.11.0+d4cacc0
node1.corp     Ready     compute   10d       v1.11.0+d4cacc0
node2.corp     Ready     compute   10d       v1.11.0+d4cacc0

oc get nodes -o wide
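With many Nodes it helps to print only the ones that are not plainly `Ready`. A sketch, assuming the `oc get nodes` column layout shown above (STATUS is the 2nd column); it also catches `Ready,SchedulingDisabled`, which is how a Node marked with `--schedulable=false` is shown. `nodes_needing_attention` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print Nodes whose STATUS is anything other than "Ready".
nodes_needing_attention() {
  oc get nodes --no-headers | awk '$2 != "Ready" { print $1, $2 }'
}
```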

OC display all PODs

oc get pods   # displays the PODs in the current project

oc get pods -o wide

oc get pods --all-namespaces

oc -n <namespace> get pods

oc get pods --all-namespaces -o wide

[root@master1 ~]# oc get pods --all-namespaces -o wide
NAMESPACE                          NAME                                           READY   STATUS             RESTARTS  AGE  IP              NODE          NOMINATED NODE
aci-containers-system              aci-containers-controller-546dbcd788-2rs8g     1/1     Running            0         5d   172.22.252.134  node1.corp    <none>
aci-containers-system              aci-containers-host-5wg69                      3/3     Running            1104      5d   172.22.252.134  node1.corp    <none>
aci-containers-system              aci-containers-host-79tg4                      3/3     Running            0         5d   172.22.252.133  master3.corp  <none>
aci-containers-system              aci-containers-host-ddtc5                      3/3     Running            0         5d   172.22.252.131  master1.corp  <none>
aci-containers-system              aci-containers-host-qnbzw                      3/3     Running            0         5d   172.22.252.132  master2.corp  <none>
aci-containers-system              aci-containers-host-rgpz7                      3/3     Running            0         5d   172.22.252.136  infra1.corp   <none>
aci-containers-system              aci-containers-host-tgtks                      3/3     Running            0         5d   172.22.252.137  infra2.corp   <none>
aci-containers-system              aci-containers-host-wrdkp                      3/3     Running            1145      5d   172.22.252.135  node2.corp    <none>
aci-containers-system              aci-containers-openvswitch-5dbkf               1/1     Running            0         5d   172.22.252.132  master2.corp  <none>
aci-containers-system              aci-containers-openvswitch-5ljz6               1/1     Running            0         5d   172.22.252.137  infra2.corp   <none>
aci-containers-system              aci-containers-openvswitch-66f48               1/1     Running            0         5d   172.22.252.133  master3.corp  <none>
aci-containers-system              aci-containers-openvswitch-9qqvf               1/1     Running            0         5d   172.22.252.134  node1.corp    <none>
aci-containers-system              aci-containers-openvswitch-hvt5z               1/1     Running            0         5d   172.22.252.131  master1.corp  <none>
aci-containers-system              aci-containers-openvswitch-j5q57               1/1     Running            0         5d   172.22.252.136  infra1.corp   <none>
aci-containers-system              aci-containers-openvswitch-prcdh               1/1     Running            0         5d   172.22.252.135  node2.corp    <none>
default                            docker-registry-1-vsckt                        1/1     Running            0         10d  10.2.0.136      infra1.corp   <none>
default                            registry-console-1-8cxw6                       1/1     Running            0         10d  10.2.0.2        master3.corp  <none>
default                            router-1-l5q8z                                 1/1     Running            230       5d   10.2.0.104      node1.corp    <none>
demo                               mongodb-1-deploy                               1/1     Running            0         29s  10.2.0.169      node2.corp    <none>
demo                               mongodb-1-rnrrs                                0/1     ContainerCreating  0         26s  <none>          node1.corp    <none>
demo                               node1-1-build                                  0/1     Completed          0         6m   10.2.0.182      node2.corp    <none>
demo                               node1-1-httj4                                  1/1     Running            0         5m   10.2.0.102      node1.corp    <none>
demo                               node1-1-hzwld                                  1/1     Running            0         2m   10.2.0.166      node2.corp    <none>
demo                               postgresql-1-hjclt                             1/1     Running            0         1m   10.2.0.168      node2.corp    <none>
demo                               rails-pgsql-persistent-1-build                 1/1     Running            0         1m   10.2.0.107      node1.corp    <none>
kube-proxy-and-dns                 proxy-and-dns-bdcdt                            1/1     Running            10        10d  172.22.252.135  node2.corp    <none>
kube-proxy-and-dns                 proxy-and-dns-j65jl                            1/1     Running            6         10d  172.22.252.134  node1.corp    <none>
kube-proxy-and-dns                 proxy-and-dns-kp6nt                            1/1     Running            0         10d  172.22.252.132  master2.corp  <none>
kube-proxy-and-dns                 proxy-and-dns-l7qm9                            1/1     Running            0         10d  172.22.252.137  infra2.corp   <none>
kube-proxy-and-dns                 proxy-and-dns-pcks9                            1/1     Running            0         10d  172.22.252.133  master3.corp  <none>
kube-proxy-and-dns                 proxy-and-dns-q647m                            1/1     Running            0         10d  172.22.252.136  infra1.corp   <none>
kube-proxy-and-dns                 proxy-and-dns-xcxx9                            1/1     Running            0         10d  172.22.252.131  master1.corp  <none>
kube-service-catalog               apiserver-4c8wl                                1/1     Running            0         10d  10.2.0.42       master2.corp  <none>
kube-service-catalog               apiserver-fk7xs                                1/1     Running            0         10d  10.2.0.5        master3.corp  <none>
kube-service-catalog               apiserver-vjhvz                                1/1     Running            0         10d  10.2.0.68       master1.corp  <none>
kube-service-catalog               controller-manager-9b2gf                       1/1     Running            0         10d  10.2.0.69       master1.corp  <none>
kube-service-catalog               controller-manager-9fl5l                       1/1     Running            3         10d  10.2.0.6        master3.corp  <none>
kube-service-catalog               controller-manager-lqbdt                       1/1     Running            0         10d  10.2.0.43       master2.corp  <none>
kube-system                        master-api-master1.corp                        1/1     Running            1         10d  172.22.252.131  master1.corp  <none>
kube-system                        master-api-master2.corp                        1/1     Running            0         10d  172.22.252.132  master2.corp  <none>
kube-system                        master-api-master3.corp                        1/1     Running            0         10d  172.22.252.133  master3.corp  <none>
kube-system                        master-controllers-master1.corp                1/1     Running            2         10d  172.22.252.131  master1.corp  <none>
kube-system                        master-controllers-master2.corp                1/1     Running            0         10d  172.22.252.132  master2.corp  <none>
kube-system                        master-controllers-master3.corp                1/1     Running            0         10d  172.22.252.133  master3.corp  <none>
kube-system                        master-etcd-master1.corp                       1/1     Running            0         10d  172.22.252.131  master1.corp  <none>
kube-system                        master-etcd-master2.corp                       1/1     Running            0         10d  172.22.252.132  master2.corp  <none>
kube-system                        master-etcd-master3.corp                       1/1     Running            0         10d  172.22.252.133  master3.corp  <none>
openshift-ansible-service-broker   asb-1-deploy                                   0/1     Error              0         10d  10.2.0.200      infra2.corp   <none>
openshift-console                  console-7bc6d8fc88-7n5pv                       1/1     Running            0         10d  10.2.0.41       master2.corp  <none>
openshift-console                  console-7bc6d8fc88-sv7fr                       1/1     Running            0         10d  10.2.0.67       master1.corp  <none>
openshift-console                  console-7bc6d8fc88-wmwdm                       1/1     Running            0         10d  10.2.0.4        master3.corp  <none>
openshift-monitoring               alertmanager-main-0                            3/3     Running            0         10d  10.2.0.197      infra2.corp   <none>
openshift-monitoring               alertmanager-main-1                            3/3     Running            0         10d  10.2.0.140      infra1.corp   <none>
openshift-monitoring               alertmanager-main-2                            3/3     Running            0         10d  10.2.0.198      infra2.corp   <none>
openshift-monitoring               cluster-monitoring-operator-6f5fbd6f8b-9kxbn   1/1     Running            0         10d  10.2.0.137      infra1.corp   <none>
openshift-monitoring               grafana-857fc848bf-9mzbt                       2/2     Running            0         10d  10.2.0.138      infra1.corp   <none>
openshift-monitoring               kube-state-metrics-88548b8d-jr7jf              3/3     Running            0         10d  10.2.0.199      infra2.corp   <none>
openshift-monitoring               node-exporter-2bcp6                            2/2     Running            0         10d  172.22.252.131  master1.corp  <none>
openshift-monitoring               node-exporter-5l4qc                            2/2     Running            0         10d  172.22.252.133  master3.corp  <none>
openshift-monitoring               node-exporter-hmnkd                            2/2     Running            6         10d  172.22.252.135  node2.corp    <none>
openshift-monitoring               node-exporter-hmnmj                            2/2     Running            0         10d  172.22.252.132  master2.corp  <none>
openshift-monitoring               node-exporter-lw4kd                            2/2     Running            11        10d  172.22.252.134  node1.corp    <none>
openshift-monitoring               node-exporter-qlhl5                            2/2     Running            0         10d  172.22.252.137  infra2.corp   <none>
openshift-monitoring               node-exporter-v24jc                            2/2     Running            0         10d  172.22.252.136  infra1.corp   <none>
openshift-monitoring               prometheus-k8s-0                               4/4     Running            1         10d  10.2.0.139      infra1.corp   <none>
openshift-monitoring               prometheus-k8s-1                               4/4     Running            1         10d  10.2.0.196      infra2.corp   <none>
openshift-monitoring               prometheus-operator-7855c8646b-gkf25           1/1     Running            0         10d  10.2.0.195      infra2.corp   <none>
openshift-node                     sync-7z7sm                                     1/1     Running            26        10d  172.22.252.134  node1.corp    <none>
openshift-node                     sync-9tbvr                                     1/1     Running            0         10d  172.22.252.133  master3.corp  <none>
openshift-node                     sync-hhfcx                                     1/1     Running            0         10d  172.22.252.132  master2.corp  <none>
openshift-node                     sync-ph4p5                                     1/1     Running            0         10d  172.22.252.131  master1.corp  <none>
openshift-node                     sync-t8qt8                                     1/1     Running            21        10d  172.22.252.135  node2.corp    <none>
openshift-node                     sync-t9575                                     1/1     Running            0         10d  172.22.252.137  infra2.corp   <none>
openshift-node                     sync-tfmg5                                     1/1     Running            0         10d  172.22.252.136  infra1.corp   <none>
openshift-template-service-broker  apiserver-5l9h4                                1/1     Running            6         10d  10.2.0.7        master3.corp  <none>
openshift-template-service-broker  apiserver-m6fxc                                1/1     Running            0         10d  10.2.0.70       master1.corp  <none>
openshift-template-service-broker  apiserver-txn9r                                1/1     Running            6         10d  10.2.0.44       master2.corp  <none>
openshift-web-console              webconsole-7f7f679596-96925                    1/1     Running            0         10d  10.2.0.40       master2.corp  <none>
openshift-web-console              webconsole-7f7f679596-dgc6l                    1/1     Running            0         10d  10.2.0.3        master3.corp  <none>
openshift-web-console              webconsole-7f7f679596-q9s9q                    1/1     Running            0         10d  10.2.0.66       master1.corp  <none>
operator-lifecycle-manager         catalog-operator-54f866c7d5-wp7x9              1/1     Running            372       10d  10.2.0.106      node1.corp    <none>
operator-lifecycle-manager         olm-operator-55fd8f9bbb-vfwd4                  1/1     Running            396       10d  10.2.0.165      node2.corp    <none>

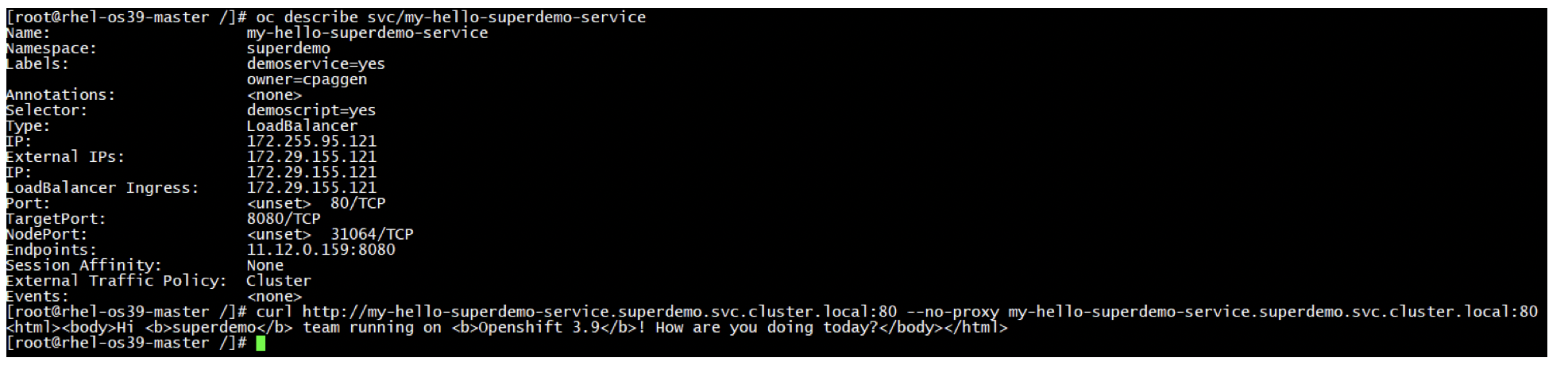
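In a listing this long, high RESTARTS counts (such as the 1104 and 1145 on two aci-containers-host PODs above) are easy to miss. A sketch that lists PODs above a restart threshold, highest first, assuming the `-o wide` column layout above (RESTARTS is column 5, NODE column 8); `restarting_pods` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "RESTARTS NAMESPACE POD NODE" for PODs restarted
# more than THRESHOLD times (default 10), sorted by restart count.
restarting_pods() {
  local threshold="${1:-10}"
  oc get pods --all-namespaces -o wide --no-headers |
    awk -v t="$threshold" '$5 + 0 > t { print $5, $1, $2, $8 }' | sort -rn
}

# Usage:
#   restarting_pods 100
```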

OC get services (LoadBalancer/Ingress/ClusterIP)

oc get svc -o wide

# get services, for example a LoadBalancer service: traffic arrives on a “public” VIP and gets redirected to the PODs/containers that belong to the application

[root@master1 ~]# oc get svc --all-namespaces
NAMESPACE                          NAME                         TYPE          CLUSTER-IP    EXTERNAL-IP                  PORT(S)                                    AGE
default                            docker-registry              ClusterIP     10.3.133.165  <none>                       5000/TCP                                   10d
default                            kubernetes                   ClusterIP     10.3.0.1      <none>                       443/TCP,53/UDP,53/TCP                      10d
default                            registry-console             ClusterIP     10.3.87.121   <none>                       9000/TCP                                   10d
default                            router                       LoadBalancer  10.3.248.68   172.22.253.21,172.22.253.21  80:31903/TCP,443:31266/TCP,1936:32547/TCP  5d
demo                               mongodb                      ClusterIP     10.3.188.23   <none>                       27017/TCP                                  1m
demo                               node1                        ClusterIP     10.3.241.45   <none>                       8080/TCP                                   7m
kube-service-catalog               apiserver                    ClusterIP     10.3.150.244  <none>                       443/TCP                                    10d
kube-service-catalog               controller-manager           ClusterIP     10.3.118.135  <none>                       443/TCP                                    10d
kube-system                        kube-controllers             ClusterIP     None          <none>                       8444/TCP                                   10d
kube-system                        kubelet                      ClusterIP     None          <none>                       10250/TCP                                  10d
openshift-ansible-service-broker   asb                          ClusterIP     10.3.8.118    <none>                       1338/TCP,1337/TCP                          10d
openshift-console                  console                      ClusterIP     10.3.190.248  <none>                       443/TCP                                    10d
openshift-monitoring               alertmanager-main            ClusterIP     10.3.123.212  <none>                       9094/TCP                                   10d
openshift-monitoring               alertmanager-operated        ClusterIP     None          <none>                       9093/TCP,6783/TCP                          10d
openshift-monitoring               cluster-monitoring-operator  ClusterIP     None          <none>                       8080/TCP                                   10d
openshift-monitoring               grafana                      ClusterIP     10.3.41.181   <none>                       3000/TCP                                   10d
openshift-monitoring               kube-state-metrics           ClusterIP     None          <none>                       8443/TCP,9443/TCP                          10d
openshift-monitoring               node-exporter                ClusterIP     None          <none>                       9100/TCP                                   10d
openshift-monitoring               prometheus-k8s               ClusterIP     10.3.244.92   <none>                       9091/TCP                                   10d
openshift-monitoring               prometheus-operated          ClusterIP     None          <none>                       9090/TCP                                   10d
openshift-monitoring               prometheus-operator          ClusterIP     None          <none>                       8080/TCP                                   10d
openshift-template-service-broker  apiserver                    ClusterIP     10.3.87.197   <none>                       443/TCP                                    10d
openshift-web-console              webconsole                   ClusterIP     10.3.41.40    <none>                       443/TCP                                    10d

Change project

To change project/namespace:

oc project <name of namespace>

Show configuration parameters of the installation

oc -n openshift-node get cm

oc -n openshift-node describe cm

Get more data about pods/nodes/svc

oc -n <namespace name> describe <svc | pod | nodes>

Replica Sets - how many instances of a POD should exist

oc get rs

oc edit rs/<name from above>

Spawn a linux POD/container with only iperf installed in it

The node-role nodeSelector field tells the scheduler where the POD should run (on Computes as usual, or maybe force it on Infra). To run on Masters you would have to change their “taint” (or tolerate it); search for the word “taint” in this big document. Make a yaml file (nodeSelector is a map of label: value, not a list):

[root@ocp-master ~]# cat test2.yaml
apiVersion: v1
kind: Pod
metadata:
  name: test-pod
  namespace: default
spec:
  containers:
  - name: test-pod
    image: jlage/ubuntu-iperf3
    command: ["/usr/bin/iperf3"]
    args: ["-s"]
    ports:
    - containerPort: 5201
      name: iperf3
      protocol: TCP
  nodeSelector:
    node-role.kubernetes.io/compute: "true"

Apply (or create) it:

oc apply -f test2.yaml

See how it got created or what it is doing with:

oc get pods   # Running is the final (OK) state
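With the iperf3 server POD running, a second throw-away POD from the same image can act as the client. A sketch, assuming the server POD is `test-pod` in `default` as in the yaml above and that `/usr/bin/iperf3` exists in the image (the yaml above already relies on that path); `iperf_client` and the `iperf-client` POD name are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: run an iperf3 client POD against the server POD's IP,
# then remove the client POD (--rm).
iperf_client() {
  local server_pod="${1:-test-pod}" ns="${2:-default}" ip
  ip="$(oc -n "$ns" get pod "$server_pod" -o jsonpath='{.status.podIP}')" || return 1
  [ -n "$ip" ] || { echo "no IP yet for $server_pod" >&2; return 1; }
  # --command makes the arguments after -- the container command, not args
  oc -n "$ns" run iperf-client --rm -i --tty --image=jlage/ubuntu-iperf3 \
    --restart=Never --command -- /usr/bin/iperf3 -c "$ip"
}

# Usage:
#   iperf_client test-pod default
```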

Openshift App Deploy Flow / Logic

After oc apply -f

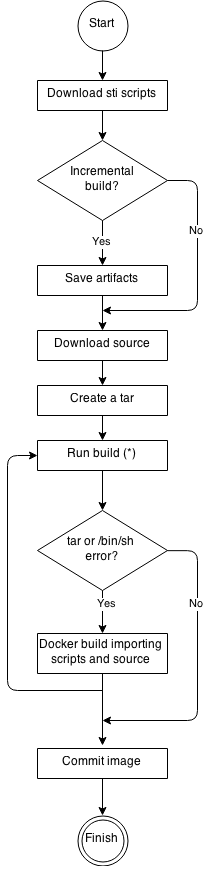

Openshift S2I that builds images from github source code

You can run an experiment yourself to see how this works (see the official docs).

s2i build https://github... centos/httpd-24-centos7 myhttpdimage

docker run -p 8080:8080 myhttpdimage

Openshift error “cannot contact 10.3.0.1” with the ACI plugin (which builds VXLAN tunnels to the Fabric/Leaf switches)

10.3.0.1 is the ClusterIP of the kubernetes service, i.e. the API on the Masters (see default/kubernetes in oc get svc). If a POD reports this error in its logs, start a busybox POD:

oc run --rm -it --image=busybox bb /bin/sh

# try wget https://10.3.0.1 (or whatever the LOG of the non-working POD reported)

# CHECK the MTU on the Node: traffic depends on the OVS RULES plus the VXLAN tunnel being built, and encapsulated packets that are too large get dropped

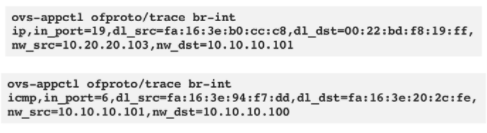

As extras you can use:

- tcpdump to see what happens with the traffic

- check that the MTU is at least 1600 on the infra VLAN (better to increase it on all interfaces for homogeneity)

- go to the OVS container on the Node and dump the rules

- try to simulate the same traffic in the OVS container with the help of the ovs-appctl utility

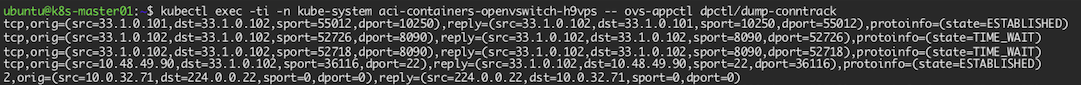
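Why 1600: VXLAN encapsulation adds about 50 bytes (outer IPv4 20 + UDP 8 + VXLAN 8 headers, plus the 14-byte inner Ethernet header that now travels as payload), so a 1500-byte POD packet needs at least 1550 bytes of MTU on the infra VLAN, and 1600 leaves headroom. A sketch that flags interfaces below a minimum, reading `ip -o link show` output; `low_mtu_interfaces` is a hypothetical helper name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "ip -o link show" output on stdin and print
# "interface mtu" for every interface whose MTU is below MIN (default 1600).
low_mtu_interfaces() {
  local min="${1:-1600}"
  awk -v min="$min" '{
    name = $2; sub(/:$/, "", name)   # "ens192:" -> "ens192"
    for (i = 1; i < NF; i++)
      if ($i == "mtu") { if ($(i + 1) + 0 < min) print name, $(i + 1); break }
  }'
}

# Usage on a Node:
#   ip -o link show | low_mtu_interfaces 1600
```

Loopback and purely local interfaces may show up too; only the uplink and infra VLAN interfaces matter for the VXLAN traffic.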

Dump OVS flows and Conntrack Table (existing and tracked connections)

Check the OVS rules and use ovs-appctl to simulate traffic and see what the end effect is (forwarded, dropped)

kubectl exec -ti -n aci-containers-system <aci-containers-openvswitch pod name> -- ovs-ofctl -O OpenFlow13 dump-flows br-int

grep the output of the above command for the POD IP. Alternatively, use ovs-appctl to simulate a packet and see what OVS would do with it; this is easier than dumping all the rules from all tables and then retracing the logic for a certain type of packet.
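An example of the simulation step using `ovs-appctl ofproto/trace`, which prints the tables and actions a described packet would hit. A sketch, assuming the OVS POD is in `aci-containers-system` as in the POD listing; the `in_port` number has to be looked up on the Node first (e.g. `ovs-ofctl -O OpenFlow13 show br-int`), and if the rules also match on MAC addresses you would add `dl_src`/`dl_dst` to the flow. Helper names are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build an OpenFlow match for a TCP packet between two PODs.
trace_flow() {
  local in_port="$1" src_ip="$2" dst_ip="$3" dst_port="$4"
  printf 'in_port=%s,tcp,nw_src=%s,nw_dst=%s,tp_dst=%s' "$in_port" "$src_ip" "$dst_ip" "$dst_port"
}

# Ask OVS in the given openvswitch POD what br-int would do with that packet.
trace_in_ovs_pod() {
  local ovs_pod="$1"; shift
  oc -n aci-containers-system exec "$ovs_pod" -- \
    ovs-appctl ofproto/trace br-int "$(trace_flow "$@")"
}

# Usage: trace node1-1-httj4 -> node1-1-hzwld on port 8080, entering on OVS port 5
#   trace_in_ovs_pod aci-containers-openvswitch-9qqvf 5 10.2.0.102 10.2.0.166 8080
```

For the conntrack table named in the heading, `ovs-appctl dpctl/dump-conntrack` run in the same container lists the tracked connections, on OVS versions that support it.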

Cisco ACI CNI - check values passed to plugin

oc -n=aci-containers-system describe configmap/aci-containers-config

Openshift describe application, see all details

For DNS resolution between internal applications (for example an application reaching the API on the Masters), the request goes to the nameserver set in the POD's /etc/resolv.conf. This is usually the IP of the Node (HOST) the POD runs on; the Node receives the request in its dnsmasq and, if it is for a cluster.local name, forwards the DNS query to SkyDNS running on 127.0.0.1:53 on the same Host.

Techsupport in case of Cloud Part/Openshift for ACI CNI integration

ACI

APIC -> Admin Section

Console -> show techsupport

CLUSTER

acikubectl debug cluster-report --output cluster-report.tar.gz [ --kubeconfig config]

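Label an Openshift Node as compute, so that workloads get scheduled on it

By default, Masters are labeled as master and Infras as infra.

You can check the labels with oc get nodes --show-labels (or oc edit node <node-hostname>) and add the compute label with: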

oc label node <node-hostname> node-role.kubernetes.io/compute=true

Make Openshift schedule loads / PODs on Masters also

This is controlled through taints. A taint allows a node to refuse pods unless they have a matching toleration. You apply taints to a node through the node specification (NodeSpec) and tolerations to a pod through the pod specification (PodSpec). A taint on a node instructs it to repel all pods that do not tolerate the taint. The trailing "-" in the command below removes the node-role.kubernetes.io/master taint from all nodes, so the Masters stop repelling regular PODs. Read More: Taints and Specs

kubectl taint nodes --all node-role.kubernetes.io/master-
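Instead of removing the taint from all Masters, a single test POD can tolerate it. A sketch that writes such a manifest, reusing the iperf3 image from above; it assumes the taint key `node-role.kubernetes.io/master` with effect `NoSchedule` and the Master label `node-role.kubernetes.io/master: "true"`. Project-level default node selectors may still keep the POD off the Masters. `write_master_tolerating_pod`, the file name and the POD name are hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a POD manifest that tolerates the master taint
# and asks to be scheduled on a Master.
write_master_tolerating_pod() {
  local file="${1:-test-master-pod.yaml}"
  cat > "$file" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: test-pod-master
  namespace: default
spec:
  tolerations:
  - key: node-role.kubernetes.io/master
    operator: Exists
    effect: NoSchedule
  nodeSelector:
    node-role.kubernetes.io/master: "true"
  containers:
  - name: test-pod
    image: jlage/ubuntu-iperf3
    command: ["/usr/bin/iperf3"]
    args: ["-s"]
EOF
}

# Usage:
#   write_master_tolerating_pod && oc apply -f test-master-pod.yaml
```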

Try to Deploy an App on Infra Nodes instead of Computes by changing the node selector

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
  namespace: default
spec:
  containers:
  - name: test-pod
    image: jlage/ubuntu-iperf3
    command: ["/usr/bin/iperf3"]
    args: ["-s"]
    ports:
    - containerPort: 5201
      name: iperf3
      protocol: TCP
  nodeSelector:
    node-role.kubernetes.io/infra: "true"

Manually import from Docker registry into your local registry - Image Stream

oc import-image <image-stream-name>:<tag> --from=<docker image repo> --confirm

See details of Image Streams

Image Streams refer to containers stored on the local registry and which share a common attribute (perhaps all the ones with tag = version 3). In this local registry you end up having images that get created from BUILD CONTAINER + source code fetched from github for your specific app, let’s say Nginx. See an example here, output of “oc describe is” command

[root@master1 ~]# oc describe is
Name:             mihai-test
Namespace:        demo
Created:          4 hours ago
Labels:           app=mihai-test
Annotations:      openshift.io/generated-by=OpenShiftWebConsole
Docker Pull Spec: docker-registry.default.svc:5000/demo/mihai-test
Image Lookup:     local=false
Unique Images:    1
Tags:             1

latest
  no spec tag

  * docker-registry.default.svc:5000/demo/mihai-test@sha256:bf387b76041c3d51be162ce6acc47c3628300066d898fc22de20118fd0d16288
      4 hours ago

Name:             node1
Namespace:        demo
Created:          2 hours ago
Labels:           app=node1
Annotations:      openshift.io/generated-by=OpenShiftWebConsole
Docker Pull Spec: docker-registry.default.svc:5000/demo/node1
Image Lookup:     local=false
Unique Images:    1
Tags:             1

latest
  no spec tag

  * docker-registry.default.svc:5000/demo/node1@sha256:ea9e746fd37754c500975bff45309b2b0c6dae4463185111c58143946590e0a1
      2 hours ago

Name:             nodejs-ex
Namespace:        demo
Created:          11 days ago
Labels:           app=nodejs-ex
Annotations:      openshift.io/generated-by=OpenShiftWebConsole
Docker Pull Spec: docker-registry.default.svc:5000/demo/nodejs-ex
Image Lookup:     local=false
Tags:             <none>

Name:             nodejs-ex-mihai-2
Namespace:        demo
Created:          2 hours ago
Labels:           app=nodejs-ex-mihai-2
Annotations:      openshift.io/generated-by=OpenShiftWebConsole
Docker Pull Spec: docker-registry.default.svc:5000/demo/nodejs-ex-mihai-2
Image Lookup:     local=false
Unique Images:    2
Tags:             1

latest
  no spec tag

  * docker-registry.default.svc:5000/demo/nodejs-ex-mihai-2@sha256:cedd032908c6722aea5ad1d881eabb4ad18c4734fd9b8070b963904eaf8cd65d
      2 hours ago
    docker-registry.default.svc:5000/demo/nodejs-ex-mihai-2@sha256:c6c0406ad08aadfe271b96bf958ac9926da98bf2c9926515b57b57524938120e
      2 hours ago

Name:             nodejs-ex-mihai-test
Namespace:        demo
Created:          5 days ago
Labels:           app=nodejs-ex-mihai-test
Annotations:      openshift.io/generated-by=OpenShiftWebConsole
Docker Pull Spec: docker-registry.default.svc:5000/demo/nodejs-ex-mihai-test
Image Lookup:     local=false
Unique Images:    1
Tags:             1

latest
  no spec tag

  * docker-registry.default.svc:5000/demo/nodejs-ex-mihai-test@sha256:1d9bb53653bcaddb380a36ca8cb1d1c8723551aa7a17751965f36e9f90c9d233
      5 days ago
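Each Docker Pull Spec above has the form <registry>/<project>/<image stream>, and each image entry is pinned by a sha256 digest. The project part (demo here) is the Openshift namespace. The split_pull_spec helper below is a hypothetical Python sketch that breaks such a reference into its parts. It assumes the three-segment layout of the integrated registry shown above.

```python
def split_pull_spec(ref: str) -> dict[str, str]:
    """Split '<registry>/<project>/<image stream>[@<digest>]' into its parts."""
    name_part, _, digest = ref.partition("@")
    registry, project, image_stream = name_part.split("/", 2)
    return {"registry": registry, "project": project,
            "image_stream": image_stream, "digest": digest}


spec = split_pull_spec(
    "docker-registry.default.svc:5000/demo/node1"
    "@sha256:ea9e746fd37754c500975bff45309b2b0c6dae4463185111c58143946590e0a1")
print(spec["project"], spec["image_stream"])  # demo node1
```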

Lower level: the Docker images directly

Here you will find the busybox image that I deployed. The last one, ubuntu-iperf3, is also something I deployed via a YAML file: it is fetched from a remote registry, but then put into its own image stream in the local registry.

[root@master1 ~]# docker images
REPOSITORY                                                  TAG           IMAGE ID       CREATED         SIZE
docker.io/busybox                                           latest        e4db68de4ff2   3 weeks ago     1.22 MB
registry.redhat.io/openshift3/ose-node                      v3.11         85e87675ef7b   3 weeks ago     1.2 GB
registry.redhat.io/openshift3/ose-control-plane             v3.11         bb262ffdc4ff   3 weeks ago     820 MB
registry.redhat.io/openshift3/ose-pod                       v3.11         8d0bf3c3b7f3   3 weeks ago     250 MB
registry.redhat.io/openshift3/ose-pod                       v3.11.117     8d0bf3c3b7f3   3 weeks ago     250 MB
registry.redhat.io/openshift3/ose-node                      v3.11.104     be8a09b5514c   6 weeks ago     1.97 GB
registry.redhat.io/openshift3/ose-control-plane             v3.11.104     c33fa4c530a3   6 weeks ago     1.6 GB
registry.redhat.io/openshift3/ose-deployer                  v3.11.104     1500740029de   6 weeks ago     1.16 GB
registry.redhat.io/openshift3/ose-console                   v3.11         6e555a73ff6e   6 weeks ago     1.05 GB
registry.redhat.io/openshift3/ose-console                   v3.11.104     6e555a73ff6e   6 weeks ago     1.05 GB
registry.redhat.io/openshift3/ose-pod                       v3.11.104     6759d8752074   6 weeks ago     1.03 GB
registry.redhat.io/openshift3/ose-service-catalog           v3.11         410f55e8c706   6 weeks ago     1.1 GB
registry.redhat.io/openshift3/ose-service-catalog           v3.11.104     410f55e8c706   6 weeks ago     1.1 GB
registry.redhat.io/openshift3/ose-web-console               v3.11         4c147a14b66f   6 weeks ago     1.12 GB
registry.redhat.io/openshift3/ose-web-console               v3.11.104     4c147a14b66f   6 weeks ago     1.12 GB
registry.redhat.io/openshift3/ose-kube-rbac-proxy           v3.11         cdfa9d0da060   6 weeks ago     1.06 GB
registry.redhat.io/openshift3/ose-kube-rbac-proxy           v3.11.104     cdfa9d0da060   6 weeks ago     1.06 GB
registry.redhat.io/openshift3/ose-template-service-broker   v3.11         e0f28a2f2555   6 weeks ago     1.11 GB
registry.redhat.io/openshift3/ose-template-service-broker   v3.11.104     e0f28a2f2555   6 weeks ago     1.11 GB
registry.redhat.io/openshift3/ose-logging-fluentd           v3.11         56eef41ced15   6 weeks ago     1.08 GB
registry.redhat.io/openshift3/ose-logging-fluentd           v3.11.104     56eef41ced15   6 weeks ago     1.08 GB
registry.redhat.io/openshift3/registry-console              v3.11.104     38a5af0ed6c5   6 weeks ago     1.03 GB
registry.redhat.io/openshift3/prometheus-node-exporter      v3.11         0f508556d522   6 weeks ago     1.02 GB
registry.redhat.io/openshift3/prometheus-node-exporter      v3.11.104     0f508556d522   6 weeks ago     1.02 GB
registry.redhat.io/rhel7/etcd                               3.2.22        d636cc8689ea   2 months ago    259 MB
docker.io/nilangekarswapnil/legacy                          rogerpoc      189a36e6cc18   2 months ago    578 MB
docker.io/noiro/cnideploy                                   4.1.1.2.r13   0ec01e31d5ca   3 months ago    51.1 MB
docker.io/noiro/aci-containers-host                         4.1.1.2.r13   a2956ca08f6c   3 months ago    35.3 MB
docker.io/noiro/opflex                                      4.1.1.2.r12   6f916e70e1d9   3 months ago    37.5 MB
docker.io/noiro/openvswitch                                 4.1.1.2.r11   097132e89f0e   3 months ago    25.2 MB
docker.io/hpestorage/legacyvolumeplugin                     3.0           22d7ab72c68f   7 months ago    550 MB
quay.io/coreos/etcd                                         v2.2.0        ee946ee864ee   3 years ago     27.4 MB
docker.io/jlage/ubuntu-iperf3                               latest        83856b0bafed   15 months ago   278 MB
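Several rows above share an IMAGE ID. This means the same image carries more than one tag: for example, ose-pod:v3.11 and ose-pod:v3.11.117 are both 8d0bf3c3b7f3, while ose-pod:v3.11.104 is a different image. The Python sketch below groups the rows by image ID. It relies only on the first three columns, because CREATED and SIZE contain spaces; the sample is copied from the output above.

```python
from collections import defaultdict

SAMPLE = """\
REPOSITORY                              TAG         IMAGE ID       CREATED       SIZE
registry.redhat.io/openshift3/ose-pod   v3.11       8d0bf3c3b7f3   3 weeks ago   250 MB
registry.redhat.io/openshift3/ose-pod   v3.11.117   8d0bf3c3b7f3   3 weeks ago   250 MB
registry.redhat.io/openshift3/ose-pod   v3.11.104   6759d8752074   6 weeks ago   1.03 GB
"""


def tags_per_image(docker_images_output: str) -> dict[str, list[str]]:
    """Group `docker images` rows by IMAGE ID -> ['repository:tag', ...]."""
    groups: dict[str, list[str]] = defaultdict(list)
    for line in docker_images_output.strip().splitlines()[1:]:  # skip the header row
        repo, tag, image_id = line.split()[:3]  # CREATED and SIZE contain spaces, so stop at 3
        groups[image_id].append(f"{repo}:{tag}")
    return dict(groups)


for image_id, refs in tags_per_image(SAMPLE).items():
    if len(refs) > 1:
        print(image_id, "is tagged as", ", ".join(refs))
```

For a format that is easier to parse, docker images --format '{{.ID}} {{.Repository}}:{{.Tag}}' prints one ID and reference per line.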

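Simple troubleshooting inside a container: ARP, routing table, open ports

Most containers are pretty limited and lack the basic utilities. However, those utilities only read the information from /proc and format it nicely for the end user, so you can read /proc directly:

- ARP table: cat /proc/net/arp
- Routing table: cat /proc/net/route. Addresses are displayed in HEX and, on x86 (little-endian) hosts, in reverse byte order, so you have to read the bytes from right to left when converting to DEC: 0100A8C0 is 192.168.0.1.
- Open ports (what netstat shows): cat /proc/net/tcp and cat /proc/net/udp (plus tcp6/udp6). In the st column, 0A means LISTEN. Note that /proc/net/netstat holds extended protocol counters, not the list of open sockets.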
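When the container has no tools at all, you can copy the /proc text out and decode it elsewhere. The Python sketch below does the decoding. It assumes a little-endian (x86) host, where IPv4 addresses appear in reversed byte order, and handles IPv4 only. Ports in /proc/net/tcp are plain hex numbers. The sample rows in the test are made up.

```python
import socket
import struct


def hex_to_ipv4(hex_addr: str) -> str:
    """Decode an IPv4 address from /proc/net/* (hex, little-endian byte order on x86)."""
    # "<I" writes the integer back out in little-endian order, restoring network byte order.
    return socket.inet_ntoa(struct.pack("<I", int(hex_addr, 16)))


def parse_route(route_text: str) -> list[tuple[str, str, str, str]]:
    """Return (iface, destination, gateway, mask) for each row of /proc/net/route."""
    rows = []
    for line in route_text.strip().splitlines()[1:]:  # skip the header line
        f = line.split()
        rows.append((f[0], hex_to_ipv4(f[1]), hex_to_ipv4(f[2]), hex_to_ipv4(f[7])))
    return rows


def listening_tcp_ports(tcp_text: str) -> list[tuple[str, int]]:
    """Return (local IP, port) for the sockets in LISTEN state (st == 0A) in /proc/net/tcp."""
    result = []
    for line in tcp_text.strip().splitlines()[1:]:  # skip the header line
        f = line.split()
        ip_hex, port_hex = f[1].split(":")
        if f[3] == "0A":  # 0A = TCP_LISTEN
            result.append((hex_to_ipv4(ip_hex), int(port_hex, 16)))
    return result


print(hex_to_ipv4("0100A8C0"))  # 192.168.0.1
```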

ACI CNI - container logs for aci-containers-host running on each Node

This is the pod that handles the CNI, IPAM and OVS rules (opflex) part. There is also a separate aci-containers-controller that talks to the APIC and K8S APIs. In addition, each node runs an OVS/OpenvSwitch container.

oc get pod -o=wide -n=aci-containers-system

oc logs pod/aci-containers-host-ID -c=aci-containers-host -n=aci-containers-system

oc logs pod/aci-containers-host-ID -c=opflex-agent -n=aci-containers-system

oc logs pod/aci-containers-host-ID -c=mcast-daemon -n=aci-containers-system

Any errors or drops on the Linux interfaces of the hosts?

netstat -in

ip -s -s link show dev <device>

netstat -s // per protocol statistics

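netstat -su // UDP-only statistics (VXLAN runs over UDP); these are host-wide, netstat cannot filter them per interface (e.g. the one with VLAN 3967)

watch -d "cat /proc/net/snmp | grep -w Udp"

ethtool -g <interface> // NIC ring buffer sizes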

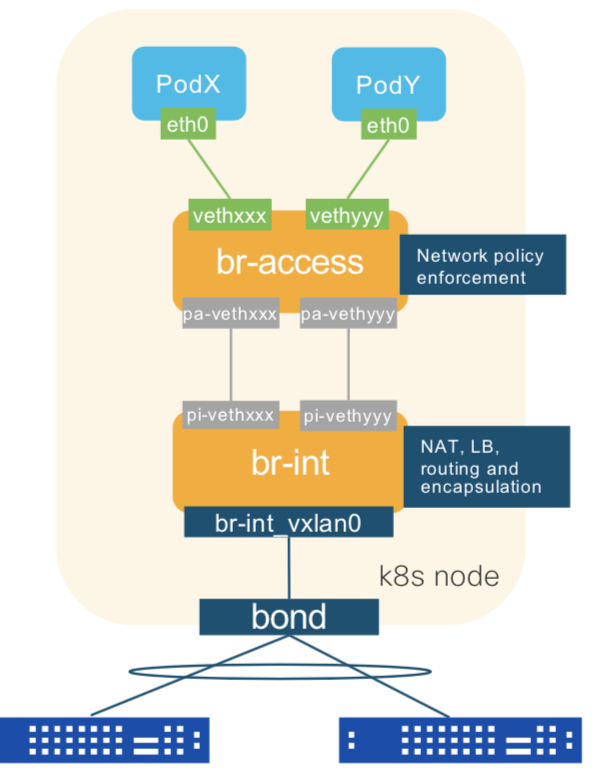
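Because watch -d only highlights what changed, it can help to compute the deltas explicitly. The Python sketch below parses the two "Udp:" lines of /proc/net/snmp: a header line with the counter names, then a line with the values. It reports which counters increased between two samples. On a node carrying VXLAN traffic, growing InErrors or RcvbufErrors points to UDP packets being dropped on the host. The sample numbers in the test are made up.

```python
def udp_counters(snmp_text: str) -> dict[str, int]:
    """Parse the two 'Udp:' lines of /proc/net/snmp (counter names, then values)."""
    # 'UdpLite:' lines do not start with 'Udp:', so they are skipped.
    udp_lines = [line.split()[1:] for line in snmp_text.splitlines() if line.startswith("Udp:")]
    names, values = udp_lines[0], udp_lines[1]
    return dict(zip(names, map(int, values)))


def udp_delta(before: str, after: str) -> dict[str, int]:
    """Counters that changed between two samples, with the amount they grew by."""
    a, b = udp_counters(before), udp_counters(after)
    return {name: b[name] - a[name] for name in a if b[name] != a[name]}
```

Take one sample with cat /proc/net/snmp, wait a few seconds, take another, and pass both texts to udp_delta.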

OpenvSwitch deployed by ACI CNI for Openshift -> dig in

This runs on each Node (Infra/Master/Worker) inside its own container, BUT its network namespace is NOT isolated: it is bound to the host OS (host networking), so it uses the Node IP to initiate the VXLAN tunnels.

Display Bridges (br-int / br-access)

ovs-vsctl show

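You should have a br-int and a br-access bridge:

- br-access: where the containers plug in with their veth interfaces, and where the firewall rules are applied.
- br-int: where the VXLAN tunnel port (vxlan0) lives; it also has the physical OS interface (eth1 here) as a port.
- The two bridges are linked through OVS patch ports: pa-vethXXXX on br-access is the peer of pi-vethXXXX on br-int.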

38243bd7-d01a-46ea-abc1-c1593b88d0fe
    Bridge br-int
        fail_mode: secure
        Port "vxlan0"
            Interface "vxlan0"
                type: vxlan
                options: {dst_port="8472", key=flow, remote_ip=flow}
        Port "pi-veth3de769f4"
            Interface "pi-veth3de769f4"
                type: patch
                options: {peer="pa-veth3de769f4"}    <-- patch port
        Port "eth1"
            Interface "eth1"
        Port "pi-vethab403f0b"
            Interface "pi-vethab403f0b"
                type: patch
                options: {peer="pa-vethab403f0b"}
        Port "pi-veth4773cb0d"
            Interface "pi-veth4773cb0d"
                type: patch
                options: {peer="pa-veth4773cb0d"}
        Port "pi-veth0b29d1a4"
            Interface "pi-veth0b29d1a4"
                type: patch
                options: {peer="pa-veth0b29d1a4"}
        Port br-int
            Interface br-int
                type: internal
        Port "pi-veth703dc804"
            Interface "pi-veth703dc804"
                type: patch
                options: {peer="pa-veth703dc804"}
    Bridge br-access
        fail_mode: secure
        Port "veth4773cb0d"
            Interface "veth4773cb0d"
        Port "veth703dc804"
            Interface "veth703dc804"
        Port "veth0b29d1a4"
            Interface "veth0b29d1a4"
        Port "pa-vethab403f0b"
            Interface "pa-vethab403f0b"
                type: patch
                options: {peer="pi-vethab403f0b"}
        Port "veth3de769f4"
            Interface "veth3de769f4"
        Port "pa-veth0b29d1a4"
            Interface "pa-veth0b29d1a4"
                type: patch
                options: {peer="pi-veth0b29d1a4"}
        Port "vethab403f0b"
            Interface "vethab403f0b"
        Port "pa-veth4773cb0d"
            Interface "pa-veth4773cb0d"
                type: patch
                options: {peer="pi-veth4773cb0d"}
        Port "pa-veth3de769f4"
            Interface "pa-veth3de769f4"
                type: patch
                options: {peer="pi-veth3de769f4"}    <-- the other part of the patch
        Port br-access
            Interface br-access
                type: internal
        Port "pa-veth703dc804"
            Interface "pa-veth703dc804"
                type: patch
                options: {peer="pi-veth703dc804"}
    ovs_version: "2.10.1"

ovs-ofctl show br-int

This shows the OpenvSwitch port NUMBERS of the ports listed above (for example, pi-veth703dc804 is port 7 on br-int). These numbers show up when dumping the OVS flow tables. The flow tables hold the rules that say what to do with a certain type of traffic when it enters an interface (e.g. change the source IP, tunnel it in VXLAN, DROP it). Notice that some ports have peer="pi-something". If you track these down, you will see that each container gets its own pair of OVS patch ports connecting br-access with br-int: pa-vethXXXX on br-access and pi-vethXXXX on br-int. It would be possible to have just ONE link between br-access and br-int for ALL containers, but that was NOT the choice made in this implementation (separation of traffic, segmentation).

OFPT_FEATURES_REPLY (xid=0x2): dpid:0000000000000001
n_tables:254, n_buffers:0
capabilities: FLOW_STATS TABLE_STATS PORT_STATS QUEUE_STATS ARP_MATCH_IP
actions: output enqueue set_vlan_vid set_vlan_pcp strip_vlan mod_dl_src mod_dl_dst mod_nw_src mod_nw_dst mod_nw_tos mod_tp_src mod_tp_dst
 1(pi-veth3de769f4): addr:da:60:86:47:f7:a7
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 2(eth1): addr:56:6f:4f:b9:00:0c
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 3(pi-vethab403f0b): addr:d6:54:69:f8:0d:08
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 4(pi-veth4773cb0d): addr:e2:11:dc:62:b1:73
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 5(pi-veth0b29d1a4): addr:96:05:ea:27:38:71
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 6(vxlan0): addr:4e:54:ab:d0:bf:e2
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 7(pi-veth703dc804): addr:3e:09:2b:1b:19:53
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 LOCAL(br-int): addr:12:f1:a4:0a:a6:4f
     config:     PORT_DOWN
     state:      LINK_DOWN
     speed: 0 Mbps now, 0 Mbps max
OFPT_GET_CONFIG_REPLY (xid=0x4): frags=normal miss_send_len=0

For comparison, here is the same output for br-access:

ovs-ofctl show br-access

OFPT_FEATURES_REPLY (xid=0x2): dpid:0000000000000002
n_tables:254, n_buffers:0
capabilities: FLOW_STATS TABLE_STATS PORT_STATS QUEUE_STATS ARP_MATCH_IP
actions: output enqueue set_vlan_vid set_vlan_pcp strip_vlan mod_dl_src mod_dl_dst mod_nw_src mod_nw_dst mod_nw_tos mod_tp_src mod_tp_dst
 1(vethab403f0b): addr:86:59:3e:ca:d6:0d
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 2(pa-veth703dc804): addr:06:a5:10:ad:1e:02
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 3(veth4773cb0d): addr:82:1f:9b:65:98:8e
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 4(veth3de769f4): addr:2a:ca:fb:0e:b0:47
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 5(veth703dc804): addr:c2:3d:35:da:63:f0
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 6(veth0b29d1a4): addr:5e:90:e6:e9:dd:b7
     config:     0
     state:      0
     current:    10GB-FD COPPER
     speed: 10000 Mbps now, 0 Mbps max
 7(pa-veth4773cb0d): addr:62:03:b9:64:29:60
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 8(pa-veth3de769f4): addr:2a:9a:85:36:c8:c1
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 9(pa-vethab403f0b): addr:96:58:59:e7:d3:28
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 10(pa-veth0b29d1a4): addr:52:d8:fe:d9:4b:dc
     config:     0
     state:      0
     speed: 0 Mbps now, 0 Mbps max
 LOCAL(br-access): addr:32:07:f3:e1:48:46
     config:     PORT_DOWN
     state:      LINK_DOWN
     speed: 0 Mbps now, 0 Mbps max
OFPT_GET_CONFIG_REPLY (xid=0x4): frags=normal miss_send_len=0
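The ovs-ofctl show output can be turned into a lookup table. This translates port numbers back to names when reading flows, and shows which pi-/pa- patch ports belong to the same container. A Python sketch follows. The pairing by name relies on the pi-/pa- prefixes seen in this cluster's output, which is an assumption about the ACI CNI naming rather than a documented rule.

```python
import re

# Port lines look like " 6(vxlan0): addr:4e:54:ab:d0:bf:e2" or " LOCAL(br-int): addr:..."
PORT_LINE = re.compile(r"^\s*(\d+|LOCAL)\(([^)]+)\):\s*addr:")


def port_map(show_output: str) -> dict[str, str]:
    """Map OpenFlow port number (as text) -> port name from `ovs-ofctl show <bridge>`."""
    return {m.group(1): m.group(2)
            for m in map(PORT_LINE.match, show_output.splitlines()) if m}


def patch_pairs(br_int: dict[str, str], br_access: dict[str, str]) -> list[tuple[str, str, str]]:
    """Pair pi-vethX (br-int) with pa-vethX (br-access) by name.

    Returns (container veth, pi port number on br-int, pa port number on br-access).
    """
    pa_by_name = {name: num for num, name in br_access.items()}
    pairs = []
    for num, name in br_int.items():
        peer = "pa-" + name[3:]
        if name.startswith("pi-") and peer in pa_by_name:
            pairs.append((name[3:], num, pa_by_name[peer]))
    return pairs
```

With the outputs above, veth3de769f4 pairs pi-veth3de769f4 (port 1 on br-int) with pa-veth3de769f4 (port 8 on br-access).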

ovs-ofctl dump-flows br-access -O OpenFlow13

Do the same for br-int (ovs-ofctl dump-flows br-int -O OpenFlow13). Here we see the rules programmed inside OpenvSwitch, the software switch that runs on each Openshift Node (virtual for masters/infra, physical for workers). These rules decide what to do with traffic as it enters a port. They match on criteria, manipulate headers, change values, or even drop the traffic when firewalling. A rule can use in_port=<port number from the output above> to apply only to traffic entering on that port, for example the veth of a POD, whose OVS port number appears in the ovs-ofctl show output.

Worth mentioning: Openshift (and K8S in general) by default implements Service load balancing through kube-proxy, using iptables rules. You will still see those rules on a Node with "iptables -L -nv" (most kube-proxy chains live in the nat table, so also check "iptables -t nat -L -nv"), but their packet counters stay at zero. This shows that packets are not going through them; they are picked up and processed by OpenvSwitch instead.

cookie=0x0, duration=71512.674s, table=0, n_packets=534443, n_bytes=104704310, priority=100,in_port=vethab403f0b,vlan_tci=0x0000/0x1fff actions=load:0x5->NXM_NX_REG6[],load:0x1->NXM_NX_REG0[],load:0x9->NXM_NX_REG7[],goto_table:2
cookie=0x0, duration=71512.674s, table=0, n_packets=1861703, n_bytes=622687676, priority=100,in_port=veth3de769f4,vlan_tci=0x0000/0x1fff actions=load:0x4->NXM_NX_REG6[],load:0x1->NXM_NX_REG0[],load:0x8->NXM_NX_REG7[],goto_table:2
cookie=0x0, duration=71512.674s, table=0, n_packets=623591, n_bytes=128342169, priority=100,in_port=veth703dc804,vlan_tci=0x0000/0x1fff actions=load:0x3->NXM_NX_REG6[],load:0x1->NXM_NX_REG0[],load:0x2->NXM_NX_REG7[],goto_table:2
cookie=0x0, duration=71512.674s, table=0, n_packets=85437, n_bytes=27350255, priority=100,in_port=veth4773cb0d,vlan_tci=0x0000/0x1fff actions=load:0x8->NXM_NX_REG6[],load:0x1->NXM_NX_REG0[],load:0x7->NXM_NX_REG7[],goto_table:2
cookie=0x0, duration=71512.674s, table=0, n_packets=206602, n_bytes=53535014, priority=100,in_port=veth0b29d1a4,vlan_tci=0x0000/0x1fff actions=load:0x2-
...

The list goes on: there are multiple tables of rules that a flow goes through.

Among them are also the ARP replies for requests targeting the service (SVC) subnet. These work together with the PBR (policy-based redirect) that ACI applies to the service VIPs to send that traffic to the Nodes/OVS. This way ACI learns the remote endpoints in the shadow BD as mapped to a specific Node (via ARP), and redirecting traffic to them then works at the forwarding level.

This looks a bit cryptic, but here is a small example to give an idea of how OpenvSwitch rules work.

> cookie=0x0, duration=71517.963s, table=9, n_packets=0, n_bytes=0, priority=10,icmp6,icmp_type=135,icmp_code=0 actions=goto_table:10

The rule above states:

- It is installed in table 9. OVS has multiple tables, and rules can send traffic from one table to another for further parsing; this gives the rule set some structure and avoids one huge table that is hard to read.
- If the packet is icmp6 (ICMP for IPv6) with type=135 and code=0 (a Neighbor Solicitation), move it to table 10 for further rule parsing.
- In the full flow dump you will find another rule in table 10 that picks the packet up and does something more with it.

Some rules also match on in_port, the INPUT port. There you will normally see either interface names or port numbers (the numbers we obtained earlier with the ovs-ofctl show br-int command).
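Reading these rules by eye gets tedious. The following Python sketch is a hypothetical helper, not part of OVS. It splits one line of ovs-ofctl dump-flows output into its match fields and its action list. It splits only on commas that are not nested inside brackets, so actions such as load:0x5->NXM_NX_REG6[] stay intact.

```python
def split_top_level(text: str) -> list[str]:
    """Split on commas that are not nested inside (), [] or {}."""
    parts, depth, current = [], 0, []
    for ch in text:
        if ch in "([{":
            depth += 1
        elif ch in ")]}":
            depth -= 1
        if ch == "," and depth == 0:
            parts.append("".join(current).strip())
            current = []
        else:
            current.append(ch)
    if current:
        parts.append("".join(current).strip())
    return [p for p in parts if p]


def parse_flow(line: str) -> dict:
    """Turn one dump-flows line into {field: value}; bare keywords such as icmp6 map to True."""
    head, _, actions = line.strip().partition(" actions=")
    fields: dict = {}
    for item in split_top_level(head):
        key, sep, value = item.partition("=")
        fields[key] = value if sep else True
    fields["actions"] = split_top_level(actions)
    return fields


rule = parse_flow("cookie=0x0, duration=71517.963s, table=9, n_packets=0, n_bytes=0, "
                  "priority=10,icmp6,icmp_type=135,icmp_code=0 actions=goto_table:10")
print(rule["table"], rule["icmp_type"], rule["actions"])  # 9 135 ['goto_table:10']
```

For the table 9 rule above, the result has table "9", icmp6 set to True, icmp_type "135" and the single action goto_table:10.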

Test if rp_filter on servers is active and might be causing issues

sysctl -a | grep rp_filter

Disable rp_filter (the reverse-path anti-spoofing filter) for a one-time test; the change lasts until reboot

for i in /proc/sys/net/ipv4/conf/*/rp_filter ; do echo 0 > "$i"; done
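The loop also writes conf/all, and that matters. The kernel's ip-sysctl documentation states that the maximum of conf/all/rp_filter and conf/<interface>/rp_filter is used for source validation on an interface. Setting only the interface to 0 therefore has no effect while "all" is still 1 or 2. The Python sketch below computes that effective value from the sysctl -a | grep rp_filter output. The sample lines in the test are made up.

```python
import re

# Note: "grep rp_filter" also returns arp_filter lines; this pattern requires ".rp_filter", so it skips them.
RP_LINE = re.compile(r"^net\.ipv4\.conf\.(.+)\.rp_filter\s*=\s*(\d)$")


def effective_rp_filter(sysctl_output: str) -> dict[str, int]:
    """Effective rp_filter per interface: max(conf/all, conf/<iface>)."""
    values = {}
    for line in sysctl_output.splitlines():
        m = RP_LINE.match(line.strip())
        if m:
            # sysctl shows dots inside interface names as '/', e.g. eth1/3967 for eth1.3967
            values[m.group(1).replace("/", ".")] = int(m.group(2))
    all_value = values.get("all", 0)
    return {iface: max(all_value, v) for iface, v in values.items()
            if iface not in ("all", "default")}
```

Any interface still showing 1 or 2 after the loop means strict or loose reverse-path filtering is still active there.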

Openshift Nodes do not get an IP on Infra VLAN with the ACI CNI

If the servers have only one interface using DHCP (the infra VLAN one), set the DHCP client to include the MAC address in the client-identifier option. Otherwise, define a DHCP client config file specific to that interface.

[root@master1 ~]# cat /etc/dhcp/dhclient.conf

send dhcp-client-identifier = hardware ;

3PAR

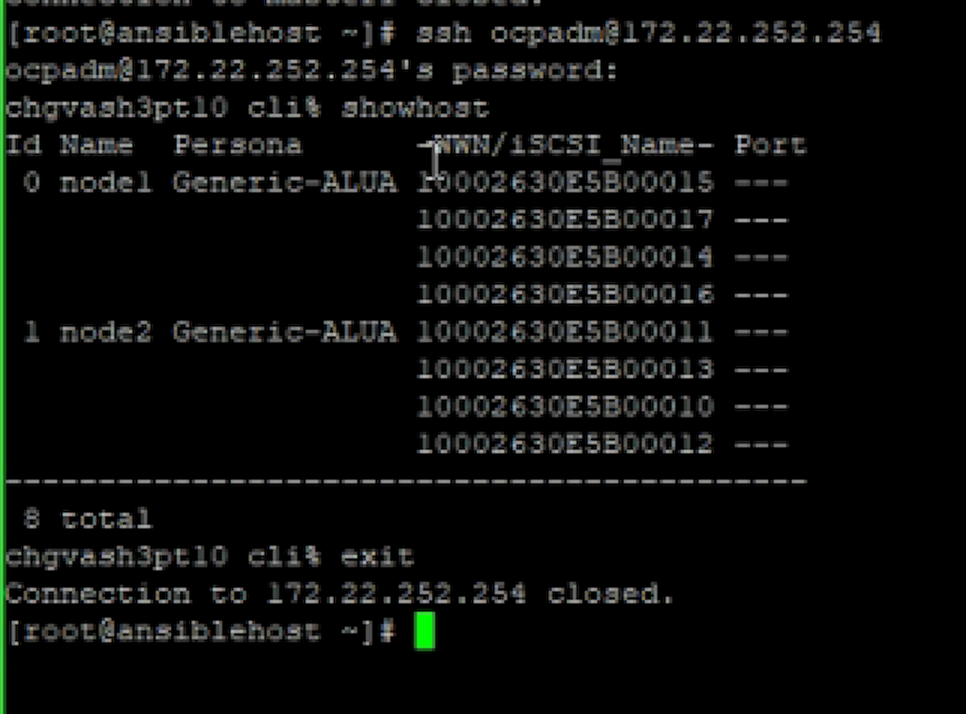

Check whether the Nodes have logged in to the 3PAR over the data path (Fibre Channel)

ssh ocpadm@172.22.252.254

showhost

This of course assumes that you took the WWPNs of the servers' Fibre Channel cards and mapped them in the 3PAR configuration. Those cards are "hardware emulated" by the HP Synergy Storage Profiles, which decide which network or storage cards a blade sees inside its OS via the backplane.
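A common snag is comparing WWPNs written in different formats. On a Linux node, the HBA WWPN is in /sys/class/fc_host/host*/port_name as 0x-prefixed lowercase hex. Other tools may print the same value with colons or in uppercase without separators; the exact format shown by showhost is an assumption here. The Python sketch below normalizes both sides and lists the server WWPNs the array does not know about. The WWPNs in the test are made up.

```python
import re


def normalize_wwpn(raw: str) -> str:
    """Normalize a WWPN to 16 uppercase hex digits, without 0x prefix or separators."""
    digits = re.sub(r"[^0-9a-f]", "", raw.strip().lower().removeprefix("0x"))
    if len(digits) != 16:
        raise ValueError(f"not a 64-bit WWPN: {raw!r}")
    return digits.upper()


def missing_on_array(server_wwpns: list[str], array_wwpns: list[str]) -> set[str]:
    """WWPNs presented by the servers that the 3PAR does not list."""
    return ({normalize_wwpn(w) for w in server_wwpns}
            - {normalize_wwpn(w) for w in array_wwpns})
```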

Volume Request / Claims

These go through the plugin_container.

# find out where it is running

docker ps | grep plugin_container

docker logs -f plugin_container 2>&1 | tee ~/container_logs.txt

Volume Creation - Troubleshoot dory and doryd

- Dory: translates FlexVolume calls into Docker Volume calls to the plugin_container (the 3PAR plugin, here over FC); it also mounts the volume inside the container.
- Doryd: sees the volume request and claim, creates the PV on 3PAR and does mkfs on it.

Logs: /var/log/dory.log

Check whether the service is running: systemctl status doryd.service

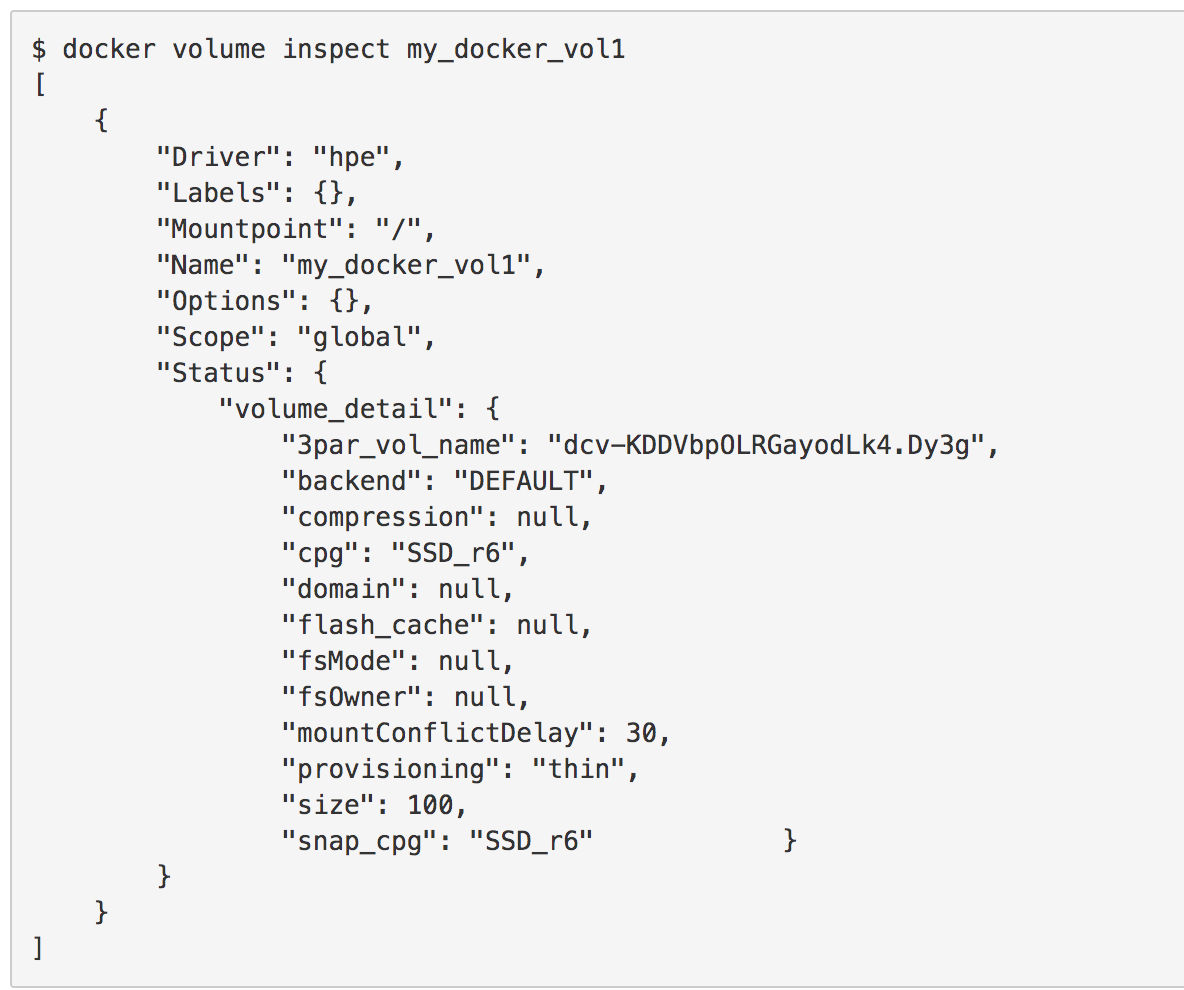

Create a volume on 3PAR by hand: bypass the FlexVolume wrapper and directly test the Docker Volume plugin it converts requests to and calls

As mentioned before, the 3PAR integration uses two components:

- Dory: the FlexVolume wrapper. It gets the volume request from Openshift, converts it to a Docker Volume request and calls plugin_container with the config for iSCSI or FC.
- DoryD: sees the request, connects via API to the 3PAR, creates the volume inside the CPG, does mkfs on it and prepares it to be mounted (the mount itself is then handled by Dory).

In this test we skip the wrapper and check directly if the Docker Volume plugin works.

docker volume create -d hpe --name fc_vol_test -o size=1

# mount it in a container

docker run -it -v fc_vol_test:/data1 --rm busybox /bin/sh

See docker volume details after creation

docker volume inspect fc_vol_test

3PAR API tests

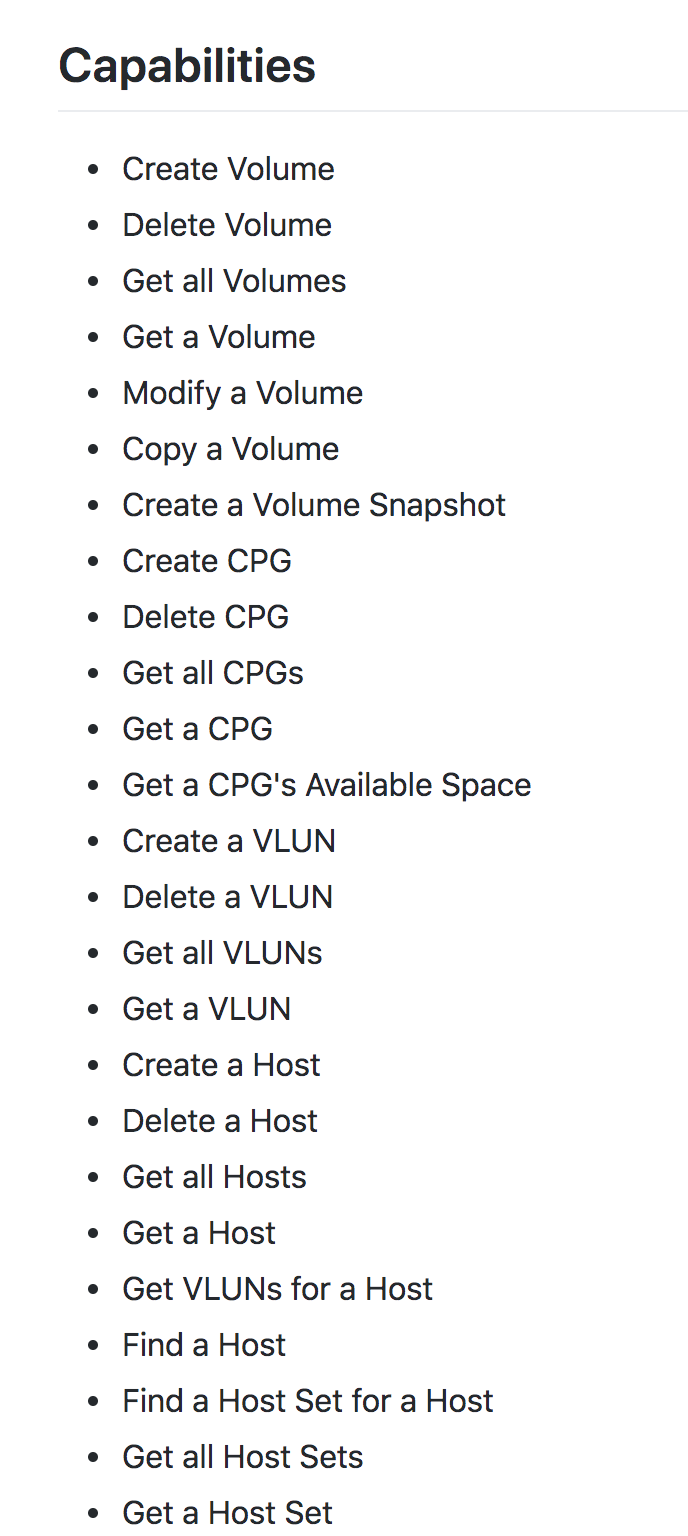

The plugin_container, which has either iSCSI or FC support for mounting volumes from the 3PAR, also uses an API to ask the 3PAR to allocate a volume. Doryd does the actual volume creation, while FlexVolume -> plugin_container handles the volume request and later mounts the volume inside the container. Inside plugin_container this is done via the python_3parclient library, found at plugin_container:/usr/lib/python3.6/site-packages/python_3parclient-4.2.9-py3.6.egg. You can also connect to that container and test API calls yourself in Python:

docker exec -it plugin_container sh (or bash)

https://github.com/hpe-storage/python-3parclient
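Below is a minimal sketch of such a test. It assumes the library exposes hpe3parclient.client.HPE3ParClient with login, getVolumes and logout methods, as in the python-3parclient README. It takes the WSAPI URL from the showwsapi output in the next section and uses placeholder credentials that you must replace. The response field names (members, name, sizeMiB, userCPG) are assumptions about the WSAPI volume objects.

```python
# Run inside plugin_container (python3). Credentials are hypothetical placeholders.
WSAPI_URL = "https://172.22.252.254:8080/api/v1"  # API_URL reported by showwsapi
USER = "replace-me"
PASSWORD = "replace-me"


def summarize_volumes(response: dict) -> list[tuple[str, int, str]]:
    """Reduce a getVolumes() response to (name, size in MiB, user CPG) tuples."""
    return [(vol["name"], vol.get("sizeMiB", 0), vol.get("userCPG", ""))
            for vol in response.get("members", [])]


def check_wsapi() -> None:
    """Log in to the 3PAR WSAPI, print the volumes, and log out again."""
    from hpe3parclient import client  # imported here so the helper above works without the library

    cl = client.HPE3ParClient(WSAPI_URL)
    cl.login(USER, PASSWORD)
    try:
        for name, size_mib, cpg in summarize_volumes(cl.getVolumes()):
            print(f"{name:30} {size_mib:>8} MiB  CPG={cpg}")
    finally:
        cl.logout()
```

Call check_wsapi() from a Python shell inside the container. The login/logout pair is wrapped in try/finally so a failed call does not leave a WSAPI session open.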

3PAR: start the StoreServ WSAPI

SSH to the 3PAR, then:

startwsapi

setwsapi -http enable

setwsapi -https enable

hgvash3pt10 cli% showwsapi
-Service- -State- -HTTP_State- HTTP_Port -HTTPS_State- HTTPS_Port -Version- -------------API_URL--------------
Enabled   Active  Disabled          8008 Enabled             8080 1.6.2     https://172.22.252.254:8080/api/v1
chgvash3pt10 cli%

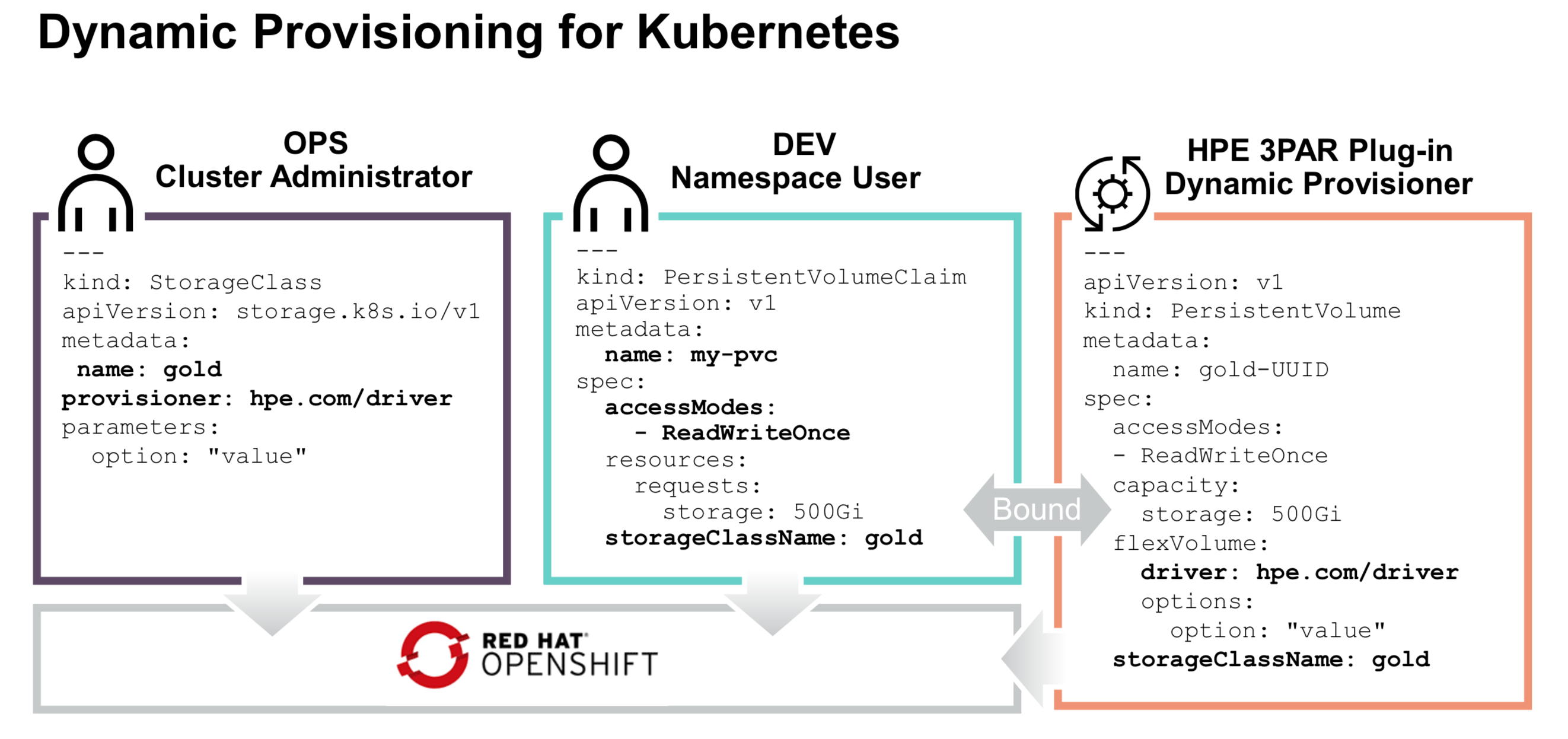

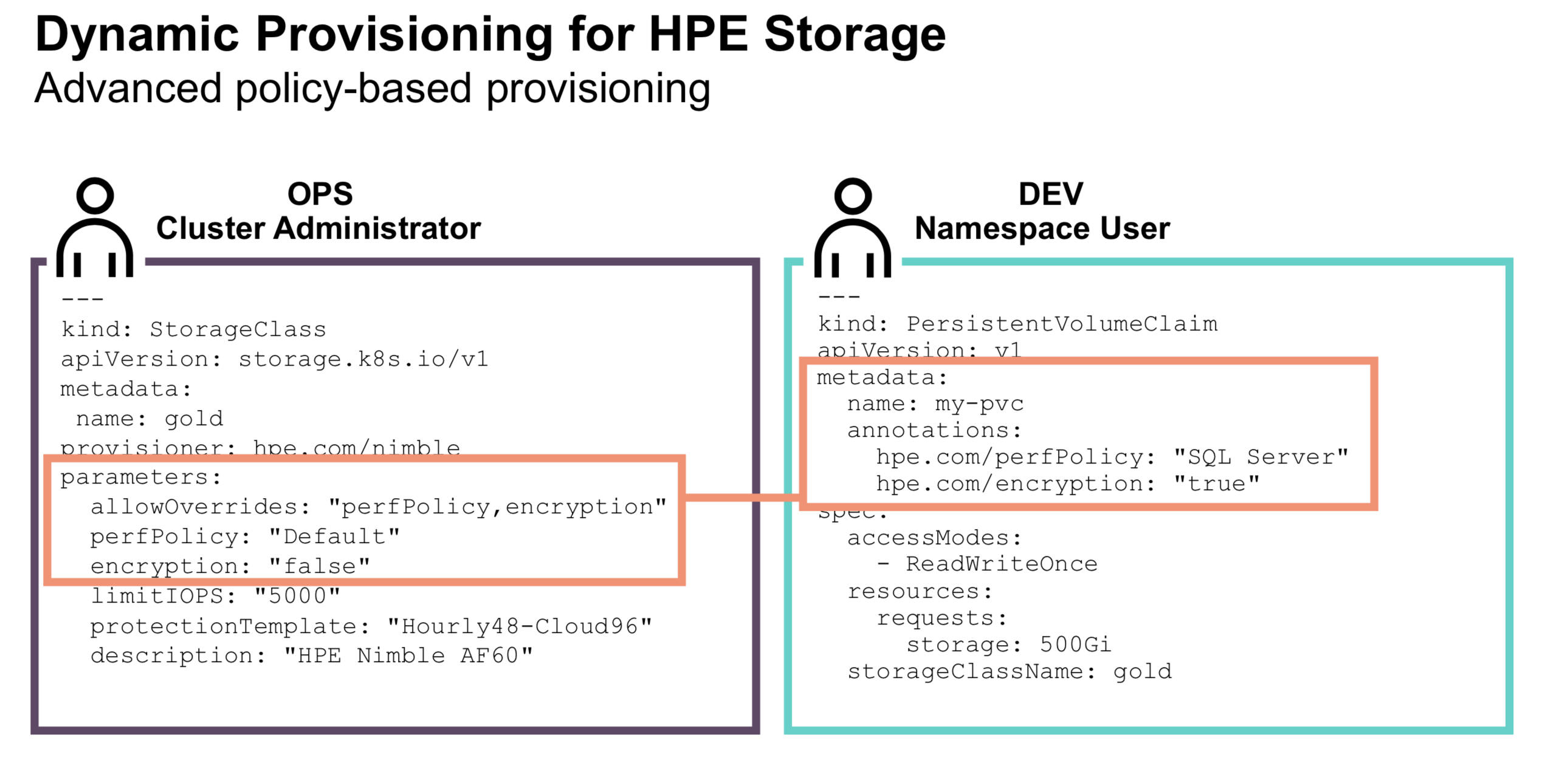

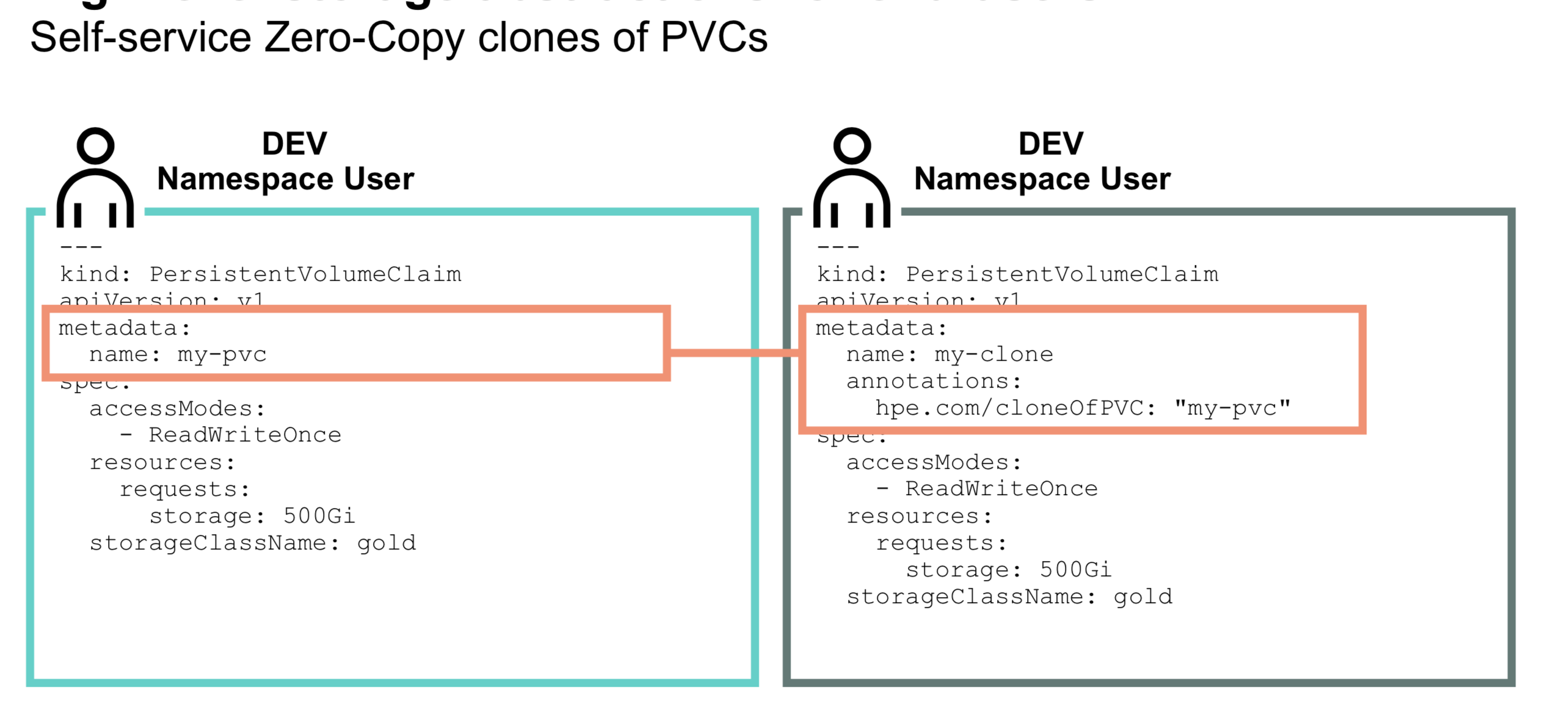

3PAR (yaml) - create storage class, volume, volume claim

In the 3PAR admin CLI (via ssh) we have already defined the CPG (Common Provisioning Group: a group of chunks of data from logical disks, which are in turn bound to physical disks). This CPG is also set as the default in the Dory (FlexVolume) plugin on the Openshift Masters. So if we create a Storage Class, it automatically maps 1:1 to this CPG, unless we specify another one in the storage class definition. Later we can make a volume request that asks, for example, "give me a 1G volume from this Storage Class". The PVC (volume claim) then says "I am a POD/container, please allocate this volume to me."

3PAR CPG <–> Openshift Storage Class <–> Volume <–> Volume Claim by a POD/container

Create Storage Class - sc1

oc apply -f

---
kind: StorageClass
apiVersion: storage.k8s.io/v1
metadata:
  name: sc1
provisioner: hpe.com/hpe
parameters:
  size: "16"

Create Volume Claims - against storage class sc1

We chose to make two with different sizes.

oc apply -f

---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc1
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 22Gi
  storageClassName: sc1
---
kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc2
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi
  storageClassName: sc1

We now have pvc1 and pvc2.

Create just a volume, unclaimed by anyone

---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-demo
spec:
  capacity:
    storage: 10Gi
  accessModes:
  - ReadWriteOnce
  flexVolume:
    driver: hpe.com/hpe
    options:
      size: "10"
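Whether a claim can use an existing PV follows the static binding rules. The claim needs a PV with the same storageClassName that offers every requested access mode and has at least the requested capacity. Below is a simplified Python sketch of those rules. The real binder also looks at label selectors, volumeMode and claimRef, which are ignored here, and only integer quantities are handled.

```python
import re

# Decimal and binary suffixes of Kubernetes resource quantities (integer values only).
_UNITS = {"k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
          "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def to_bytes(quantity: str) -> int:
    """Convert a quantity such as '22Gi' or '500M' to bytes."""
    match = re.fullmatch(r"(\d+)(k|M|G|T|Ki|Mi|Gi|Ti)?", quantity)
    if match is None:
        raise ValueError(f"unsupported quantity: {quantity!r}")
    number, unit = match.groups()
    return int(number) * (_UNITS[unit] if unit else 1)


def can_bind(pv: dict, pvc: dict) -> bool:
    """Simplified static binding check between one PV and one PVC."""
    same_class = pv.get("storageClassName", "") == pvc.get("storageClassName", "")
    modes_ok = set(pvc["accessModes"]) <= set(pv["accessModes"])
    big_enough = to_bytes(pv["capacity"]) >= to_bytes(pvc["request"])
    return same_class and modes_ok and big_enough


# Values taken from the YAML above.
pv_demo = {"capacity": "10Gi", "accessModes": ["ReadWriteOnce"]}
pvc1 = {"request": "22Gi", "accessModes": ["ReadWriteOnce"], "storageClassName": "sc1"}
pvc2 = {"request": "2Gi", "accessModes": ["ReadWriteOnce"], "storageClassName": "sc1"}

print(can_bind(pv_demo, pvc1), can_bind(pv_demo, pvc2))  # False False
```

pv-demo has no storageClassName, so neither pvc1 nor pvc2 (both sc1) can bind to it and it stays unclaimed. The claims are served through dynamic provisioning by sc1 instead.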

How the 3PAR plugin_container (FlexVolume to Docker Volume plugin) is started

docker-compose creates a container from the definition below and brings it up.

hpedockerplugin:
  image: nilangekarswapnil/legacy:rogerpoc
  container_name: plugin_container
  net: host
  privileged: true
  volumes:
    - /dev:/dev
    - /run/lock:/run/lock
    - /var/lib:/var/lib
    - /var/run/docker/plugins:/var/run/docker/plugins:rw
    - /etc:/etc
    - /root/.ssh:/root/.ssh
    - /sys:/sys
    - /root/plugin/certs:/root/plugin/certs
    - /sbin/iscsiadm:/sbin/ia
    - /lib/modules:/lib/modules
    - /lib64:/lib64
    - /var/run/docker.sock:/var/run/docker.sock
    - /opt/hpe/data:/opt/hpe/data:rshared