AVI Load Balancer Scaling VIP with Contrail

A customer recently wanted to see how well the AVI Load Balancer scales, and used Ixia BreakingPoint to generate the load and observe the behavior. The point was to see exactly when the AVI Controller tells OpenStack to spawn a new instance of an AVI SE (Service Engine, the Avi load balancer VM that holds a VIP, with the pool members reachable behind it) and how this process unfolds.

The following setups were tested:

- AVI LB VMs doing BGP (BGPaaS) with the Contrail vRouter and announcing VIPs

- AVI Controller configured to spawn AVI SEs / LB VMs, relying on the built-in ECMP/AAP (Allowed Address Pair) features of Contrail (and yes, in case you are wondering, the VIP does not have to come from the same subnet as the one directly connecting the AVI LB to the vRouter)

- AVI LB VMs doing BGP Multihop with the SDN GW inside a VRF (this means that the SDN GW learns the prefixes and reuses the same LSP/label for transport/VPN as it already has for the directly connected IP of the AVI LB VM that originated the prefix)

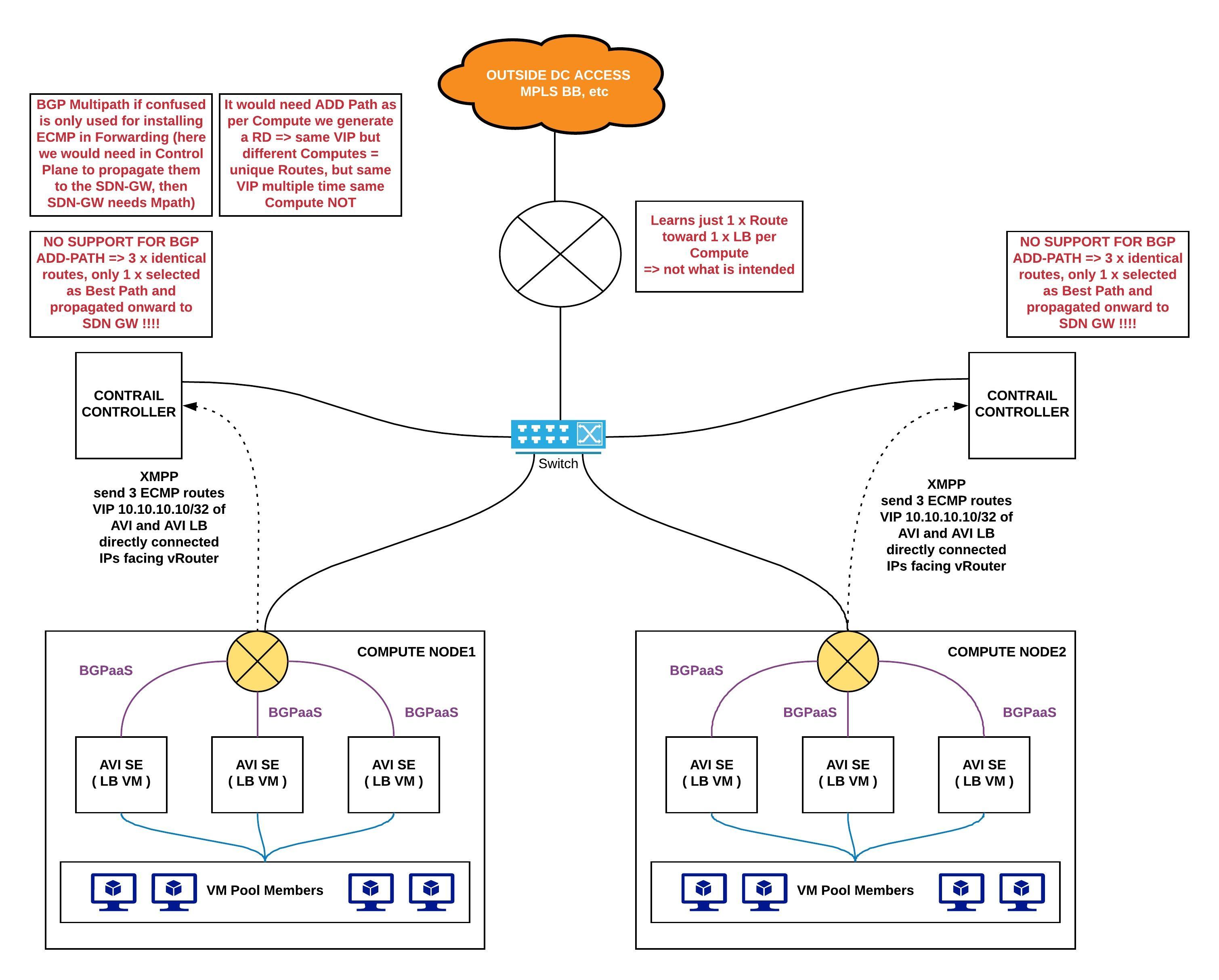

BGPaaS

This one did not really work as initially expected. Contrail generates a unique VPNv4 Route Distinguisher per Compute Node. This means that when:

- 3 AVI LB VMs

- behind same Compute

- announce same VIP

then the Contrail Controller sees 3 identical routes and learns just one, which becomes the Best Path. To have all 3 accepted in the RIB (the Controller does no forwarding), it would have needed BGP AddPath (currently not supported). Even with that out of the way, the SDN GW would then have required BGP Multipath, i.e. installing all learned paths in the FIB. And of course propagating all 3 routes onward to a Route Reflector in the backbone would again have required AddPath on the SDN GW as well (think of these two features as Control Plane vs Forwarding Plane = AddPath vs Multipath).
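To make the AddPath vs Multipath split a bit more tangible, here is a toy Python model of it. Everything in it is an assumption made for illustration: the `Route` class, the `advertise` and `install_fib` functions, the RD and the addresses are invented, and the tie-break is a stand-in for the real BGP best-path algorithm. It is not how Contrail or any SDN GW implements this.

```python
from dataclasses import dataclass
from ipaddress import ip_address


@dataclass(frozen=True)
class Route:
    rd: str        # Route Distinguisher (one per Compute Node in this sketch)
    prefix: str    # the VIP being announced
    next_hop: str  # interface IP of the AVI SE that announced it


def advertise(routes, add_path=False):
    """Control plane: which paths does a BGP speaker pass on to a peer?"""
    if add_path:
        # With AddPath every path is sent on (each with its own path ID).
        return list(routes)
    best = {}
    for route in routes:
        key = (route.rd, route.prefix)  # same RD + same prefix = same NLRI
        current = best.get(key)
        # Toy tie-break: lowest next hop wins. Real best-path selection
        # has many more steps; this only shows that one path survives.
        if current is None or ip_address(route.next_hop) < ip_address(current.next_hop):
            best[key] = route
    return list(best.values())


def install_fib(routes, multipath=False):
    """Forwarding plane: which next hops get installed per prefix?"""
    fib = {}
    for route in routes:
        hops = fib.setdefault(route.prefix, [])
        if multipath or not hops:
            hops.append(route.next_hop)
    return fib


# Three SEs behind the same Compute announce the same VIP (made-up values).
routes = [
    Route("10.0.0.11:5", "192.0.2.10/32", "10.1.1.3"),
    Route("10.0.0.11:5", "192.0.2.10/32", "10.1.1.4"),
    Route("10.0.0.11:5", "192.0.2.10/32", "10.1.1.5"),
]

print(len(advertise(routes)))                 # paths sent without AddPath
print(len(advertise(routes, add_path=True)))  # paths sent with AddPath
print(install_fib(advertise(routes, add_path=True), multipath=True))
```

The point of keeping the two functions separate is that you need both knobs: AddPath without Multipath gets all paths into the RIB but only one next hop into the FIB, and Multipath without AddPath has nothing extra to install.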

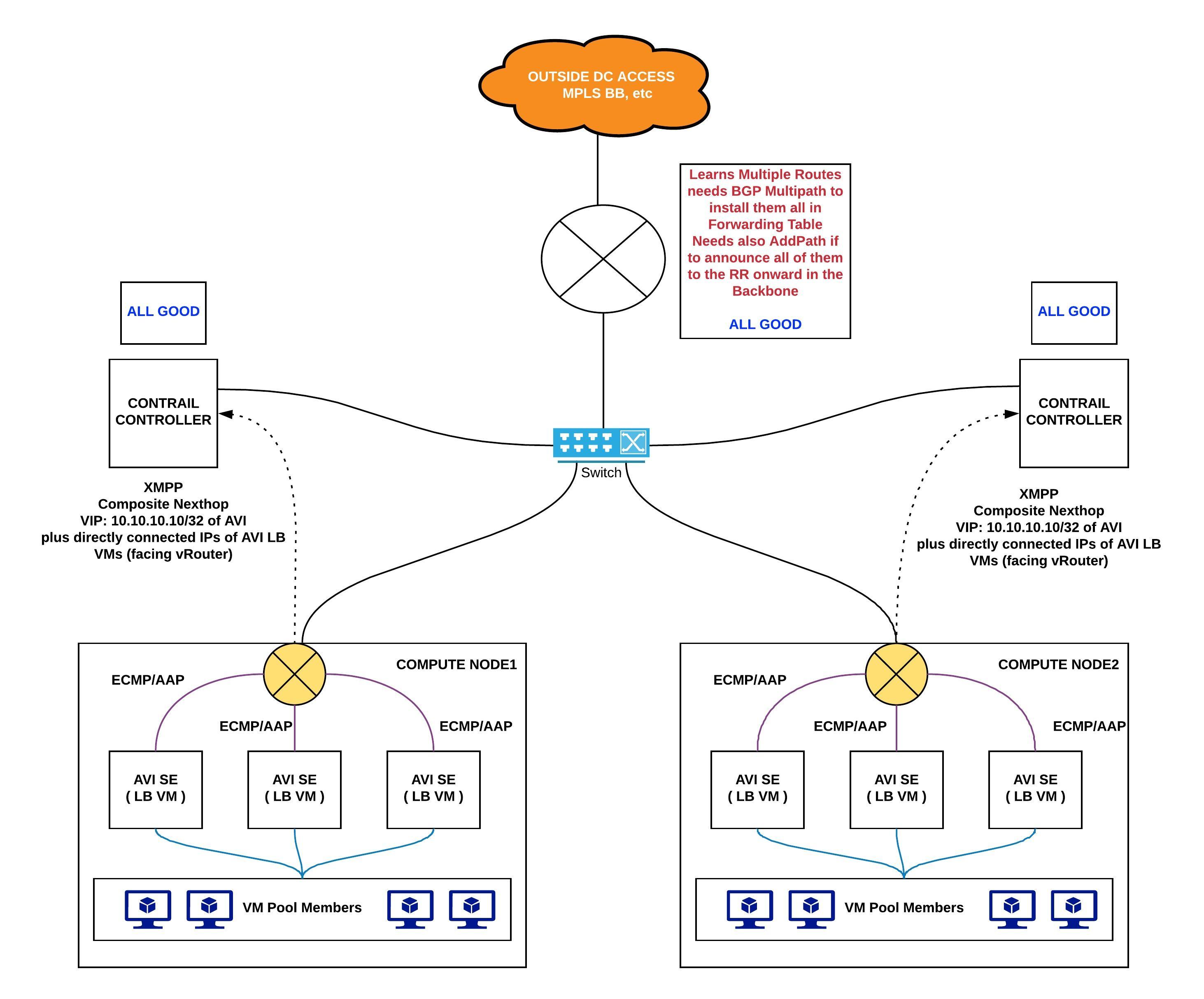

ECMP/AAP

This one worked like a charm. The AVI Controller takes care of everything and talks to Contrail, so you do not have to configure much. When configuring a Virtual Service on the Controller, just check that AAP is enabled and proceed with your VIP. The Contrail vRouter will learn that VIP regardless of whether it is in the same subnet as the one directly connecting it to the AVI VM. If there are multiple routes to the same VIP inside the same Compute, the vRouter just creates an ECMP route at that level and propagates a single route outside. Traffic is still load-balanced, but you have less control over how it is distributed and you can end up with some asymmetry (which might break stateful applications that require both directions to pass through the same LB; this is not a Contrail problem, though, but a generic application concern that has to be studied carefully before deploying).
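If you have never looked at how an ECMP route spreads traffic, the sketch below shows the general idea in Python: hash the flow 5-tuple and use the result to pick one member. Note that this is a generic illustration. The `pick_next_hop` function, the SHA-256 hash and all addresses are assumptions of mine, and the actual hash the vRouter uses is not reproduced here.

```python
import hashlib


def pick_next_hop(flow, next_hops):
    """Pick one ECMP member by hashing the flow 5-tuple (toy hash)."""
    key = "|".join(str(field) for field in flow).encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(next_hops)
    return next_hops[index]


# Made-up SE interface IPs that all sit behind the same VIP.
se_interfaces = ["10.1.1.3", "10.1.1.4", "10.1.1.5"]

# (src IP, src port, dst IP, dst port, protocol)
forward = ("198.51.100.7", 40001, "192.0.2.10", 443, "tcp")
reverse = ("192.0.2.10", 443, "198.51.100.7", 40001, "tcp")

# The same flow always lands on the same SE ...
print(pick_next_hop(forward, se_interfaces))
# ... but the reverse tuple is hashed independently, so nothing in this
# toy model guarantees that it maps to the same SE.
print(pick_next_hop(reverse, se_interfaces))
```

A given flow is sticky to one SE because the hash is deterministic, which is also why you cannot steer individual flows: the distribution is whatever the hash gives you.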

BGP Multihop with SDN GW

This is basically an iteration of the first scenario, the difference being that we peer Multihop, from the AVI VM directly to the SDN GW, with a Loopback placed inside our VRF. That means we move the BGP AddPath requirement to the SDN GW, which needs it for learning multiple routes and propagating them to a Backbone Route Reflector. For forwarding traffic over those equal-cost paths it needs BGP Multipath. So if you activate these two features, you are on the safe side. I initially worried that the vRouter might drop return traffic, but after testing and on second thought, the vRouter is just doing MPLS switching. The VIP is learned inside a VRF with NH = the AVI LB VM's directly connected IP facing the vRouter. LSPs are already built to those directly connected IPs, so the return traffic to the VIPs just uses the same MPLS labels as those directly connected IPs of the AVI SEs.
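The label reuse is easier to see as a two-step lookup, sketched here in Python. The tables, the `labels_for` function, the addresses and the label values are all hypothetical; the sketch only models the recursion "VIP, then next hop, then label", not an actual SDN GW or vRouter data structure.

```python
# Labels the SDN GW already holds for the directly connected SE interface IPs
# (made-up values).
connected_labels = {
    "10.1.1.3": 10031,
    "10.1.1.4": 10032,
    "10.1.1.5": 10033,
}

# VIP learned over the Multihop BGP sessions, with NH = SE interface IP.
# With AddPath + Multipath all three next hops are kept and installed.
vip_routes = {
    "192.0.2.10/32": ["10.1.1.3", "10.1.1.4", "10.1.1.5"],
}


def labels_for(prefix):
    """Resolve a VIP to MPLS labels through its next hops (no new labels)."""
    return [connected_labels[next_hop] for next_hop in vip_routes[prefix]]


print(labels_for("192.0.2.10/32"))  # [10031, 10032, 10033]
```

No label is allocated for the VIP itself in this model: the packet is sent with the label of the SE interface IP, and the vRouter switches on that label without needing to know the VIP.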