AVI Load Balancer & Contrail Integration

Recently I had the chance to play in a lab with a Cloud Load Balancer vendor that I had no clue about before, which proved to be a challenging but also rewarding experience. I’m talking about AVI Load Balancer (www.avinetworks.com). This article walks you through its basic concepts, how to integrate it with Contrail, how to see what it does in Contrail, how it provisions the VIPs, and what the potential tips & tricks and shortcomings might be.

Caveat before we start

AVI relies heavily on having DHCP enabled in Openstack, so if you are planning to disable DHCP (although one can make static mappings/allocations in it), you will face a few key issues.

- AVI Controller requires that you set a password before you can log in to it BOTH as root (CLI) and via the web interface. With newer versions this can ONLY be set using the web interface and its default credentials => the device needs to get an IP, otherwise you can’t access the Web UI

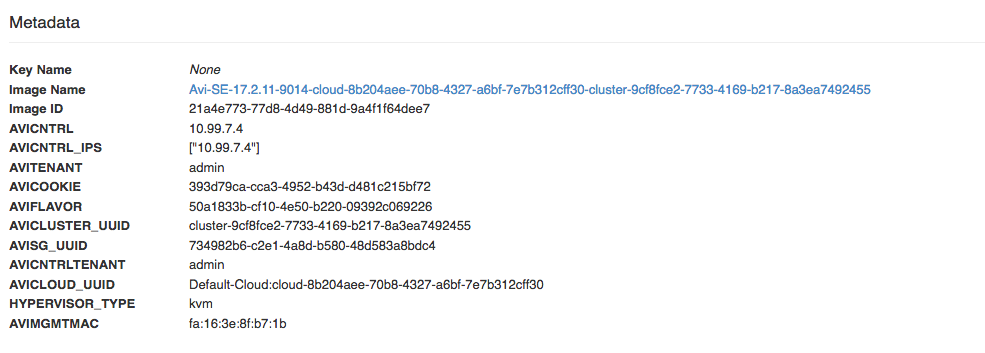

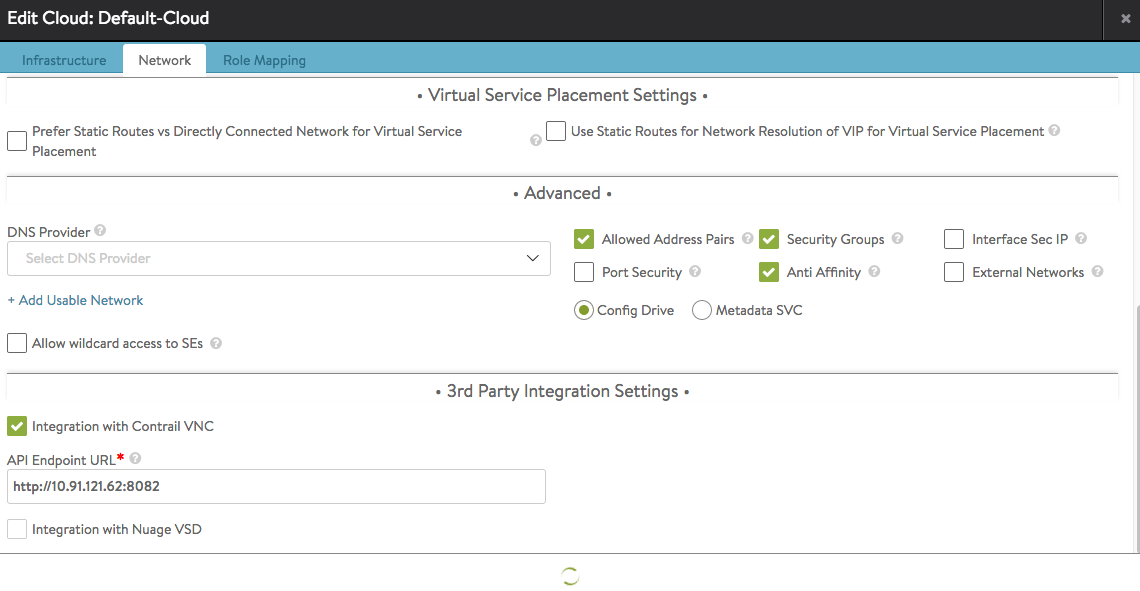

- AVI SEs also require DHCP: they boot on the Management Network and then, via an Openstack Metadata Variable pre-provisioned by the Controller (AVICNTRL_IPS=[“IP CTRL”], AVICNTRL=“ip|hostname”), connect to the Controller to fetch which Virtual Services need to be configured on them. Without initial network connectivity they will not be provisioned and will act like a ghost :) There is also an option to switch the SEs from Metadata to Config Drive in the Infrastructure -> Cloud settings menu.

Introduction about AVI

What is AVI, short comparison with F5, principle of working

Well, AVI is a load balancer for the Cloud that can work easily with one of the following:

- VMWare

- Openstack

- Amazon

- Mesos

- Linux servers

- Openshift / Kubernetes

- Microsoft Azure

It follows the by now classic principle of a Controller which in turn spawns Service Engines. A Service Engine is, in plain language, a Load Balancer instance.

How it does this:

- AVI Controller calls Openstack to spawn a new VM (Service Engine) and provisions also metadata for it.

- Service Engine is spawned with several metadata sent variables among which the most important are:

- AVICNTRL= “hostname/IP of the Controller”

- AVICNTRL_IPS= “array of IPs of Controller(s)”

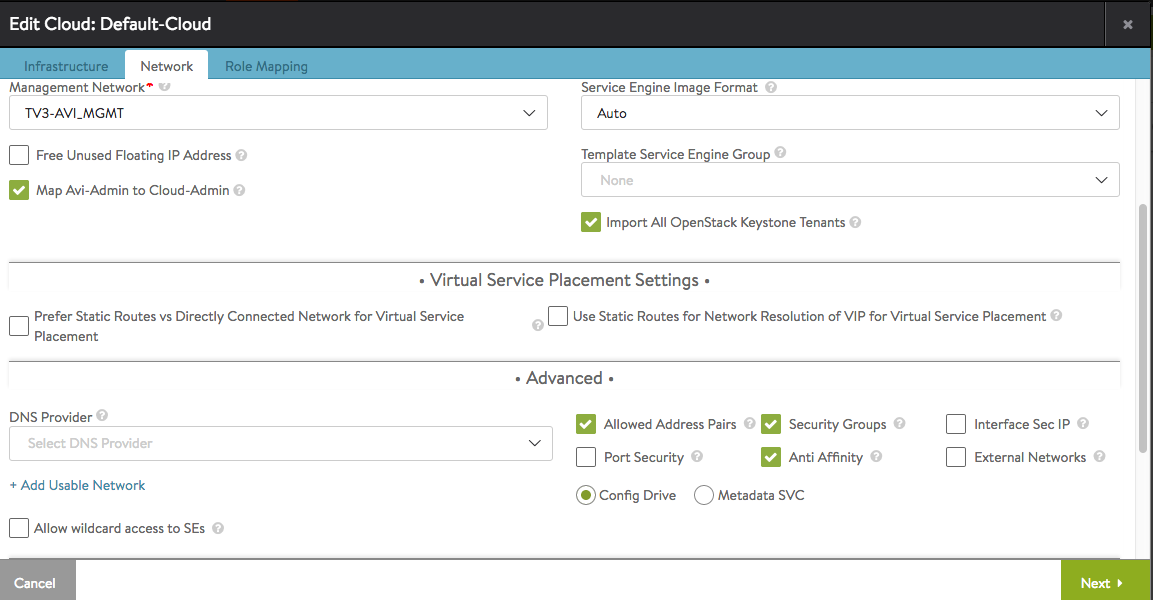

- Instead of Metadata variables one can also use a Config Drive: select it under AVI -> Infrastructure -> Clouds -> edit.

If, because of a bug or some other problem, the Service Engines are spawned without those variables defined, they have no way of figuring out who the Controller is and as such will not get their provisioning information (which virtual services have to be configured and initialized on them). A virtual service = a load balancer service, e.g. a web service with a VIP and a pool of web servers behind it.
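A minimal sketch of the dependency described above: given the instance metadata as a dictionary, work out which Controller addresses an SE could use. The variable names AVICNTRL and AVICNTRL_IPS come from the text; the function name, the dictionary shape, the sample addresses and the assumption that AVICNTRL_IPS is a JSON-style array are illustrative only and are not the actual SE logic.

```python
import json


def controller_addresses(metadata):
    """Return the Controller addresses found in SE metadata, or [] if none.

    Assumption: AVICNTRL_IPS is a JSON array encoded as a string and
    AVICNTRL is a single hostname or IP. The real format may differ.
    """
    addresses = []
    raw_ips = metadata.get("AVICNTRL_IPS")
    if raw_ips:
        try:
            parsed = json.loads(raw_ips)
        except ValueError:
            parsed = []  # unreadable value: treat it as missing
        if isinstance(parsed, list):
            addresses.extend(str(ip) for ip in parsed)
    single = metadata.get("AVICNTRL")
    if single and single not in addresses:
        addresses.append(single)
    return addresses


if __name__ == "__main__":
    # Hypothetical addresses, for illustration only.
    sample = {"AVICNTRL": "10.0.0.10",
              "AVICNTRL_IPS": '["10.0.0.10", "10.0.0.11"]'}
    print(controller_addresses(sample))
    # An empty result corresponds to the "ghost" SE from the caveat section.
    print(controller_addresses({}))
```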

What does AVI use for having overlapping routing tables and information (management, virtual services) ?

That’s a simple question: they chose something already implemented in Linux, namely “namespaces”.

- Management -> Host OS Routing Table

- Virtual Services -> Linux Namespaces called avi_ns[1..x]

For pool member networks, despite getting a default GW from DHCP, the AVI SEs seem to be smart enough to ignore it and not install it (it would overlap with the default GW of the VIP network / External Network).

AVI deployment models inside Openstack

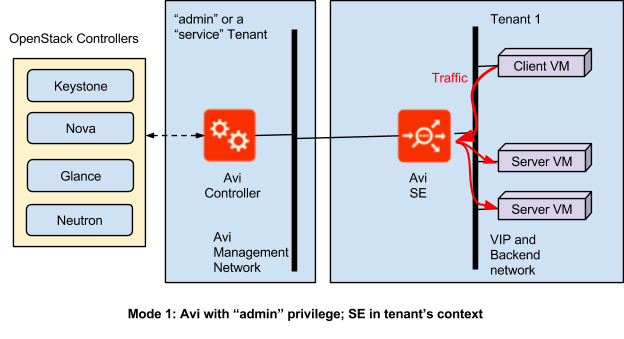

What this refers to is how we can put AVI Controller/AVI SEs inside Openstack (same tenant, different tenants -> controller = admin, SEs = customer tenants). The following information is taken from the AVI website, specifically from here: [AVI deployment possibilities](https://avinetworks.com/docs/18.1/ref-arch-openstack/)

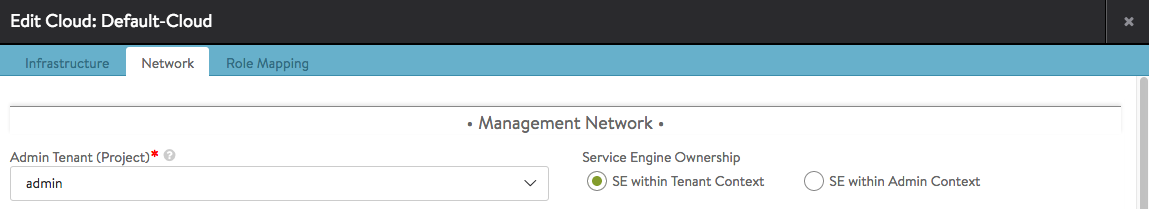

Settings can be found in AVI Controller -> Infrastructure -> Cloud

Mode 1: Avi with “admin” privilege; SE in tenant’s context

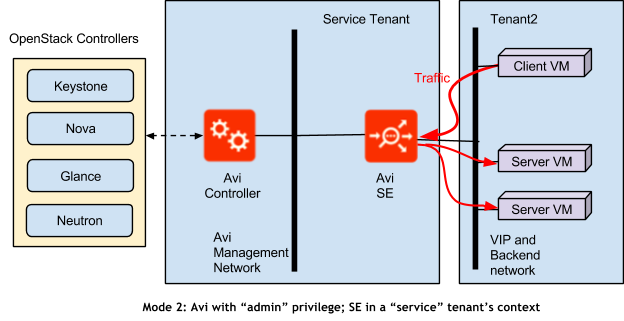

Mode 2: Avi with “admin” privilege; SE in a “service” tenant’s context

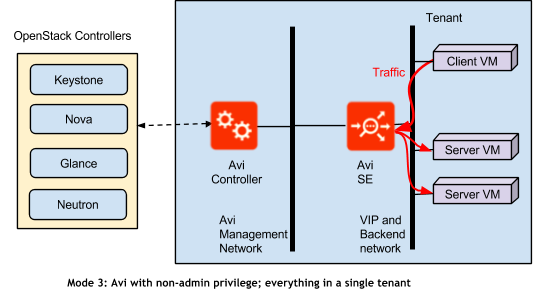

Mode 3: Avi with non-admin privilege; everything in a single tenant

Where I see a bit of complexity?

Controller HA setup, while not bad, tends to reinvent the wheel a bit in an era where HA solutions have been present in Linux and stable for years. Their webpage states at some point that encrypted keepalives are sent between the 3 x AVI Controller HA nodes (typical redundant setup). In reality, given Controllers A, B and C, I have seen that:

- A –> SSH connection plus a range of Ports Forwarded over it to –> B

- B –> same to C

- C –> same to A

Then a daemon sends keepalives over the locally forwarded ports to the daemons running on the other nodes.

How does it compare to F5

My knowledge is not that advanced in either subject, but so far I have spotted a few things.

| AVI | F5 |
|---|---|
| cloud based from the beginning, VIPs announced via BGP or as aliases via Openstack AAP (allowed address pairs) | in the past not a straightforward integration but rather spawning instances by hand (there was no controller, just GTM, but then the cost was going up) |
| DNS and BGP based LB | DNS and BGP based LB |
| BGP Quagga daemon | BGP Zebra daemon |
| REST API, ansible | REST API + multiple automation possibilities: event-based scripts (iCall), iControl, iApps, ansible |
| Still evolving documentation base, sometimes lacking in-depth tech data | Well developed community and internal forums, easy to find explanations, examples, help |

How does it integrate with Contrail

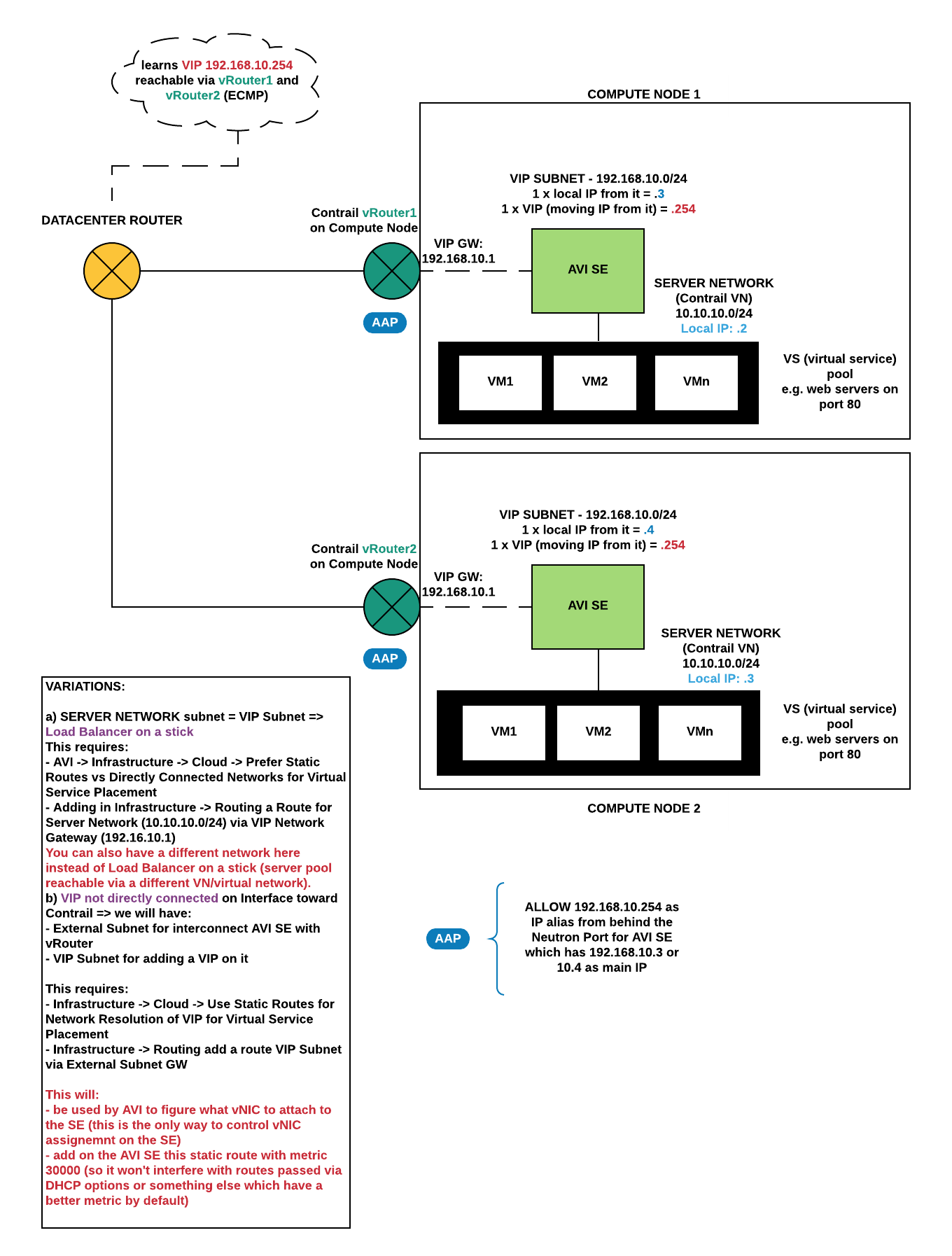

Upon setup it asks for both the Openstack URL and the Contrail URL, which is where it will make requests for spawning Service Engines and for adding networks (virtual networks) to them. In Contrail it will also assign Floating IPs from a predefined pool (created outside AVI) and will create AAP entries for secondary IPs (VIPs), so that the security mechanisms allow them to appear from behind the Neutron Ports of the Service Engines (a Service Engine has a normal IP in a subnet = Neutron Port, but behind that “port” traffic will also come sourced from VIP IPs).
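To make the AAP part more tangible, here is a rough sketch of what such an entry looks like at the Neutron API level, where a port carries an `allowed_address_pairs` list. The helper name is made up for illustration, and whether AVI performs this through Neutron or directly through the Contrail API is not something this article verifies.

```python
import json


def aap_update_body(existing_pairs, vip):
    """Build the body of a Neutron port update that adds `vip` as an
    allowed address pair while keeping the pairs already on the port."""
    pairs = [dict(pair) for pair in existing_pairs]
    if not any(pair.get("ip_address") == vip for pair in pairs):
        pairs.append({"ip_address": vip})
    return {"port": {"allowed_address_pairs": pairs}}


if __name__ == "__main__":
    # 10.99.2.131 is the VIP used later in this article.
    print(json.dumps(aap_update_body([], "10.99.2.131"), indent=2))
```

In a scaleout setup the same VIP would be added to the port of every SE that serves the virtual service.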

How do you initially configure it with Openstack and Contrail

This one is pretty easy, and since I think pictures are much simpler to understand, I will put here some samples from the Web UI hosted on the AVI Controller.

If you are unable to edit any option, change the Tenant to admin (if not already in admin) from the menu in the top-right corner of the screen. You can find these settings later in: Infrastructure -> Clouds

Possible Setups

Openstack has the concept of FIP (floating IP), which is also implemented in Contrail and which is normally a public IP (or an IP for access from the outside) mapped to an internal Neutron Port == Virtual Machine (or in this case the SE = service engine IP). AVI comes with the concept of VIP as well, which can be a bit confusing as:

- one can use both a FIP and VIP with traffic coming on a FIP being redirected to a VIP

- one can eliminate the FIP and just import external routes into the VIP network and then “advertise” the VIP:

  - via AAP (Contrail would learn that the VIP is visible from 1..N Neutron Ports and allow traffic coming from it from behind them via Allowed Address Pairs; 1..N Neutron Ports = 1 x Port for each SE that is spawned to serve this traffic in a scaleout setup)

  - via BGP announcement (each SE that is hosting this VS = virtual service would be announcing this VIP via BGP to the Contrail vRouter, which is the vRouter hosted on the corresponding Compute Node where the SE is; from the Contrail vRouter it would get proxied behind the scenes to one of the Contrail Controller Nodes where the BGP session would in reality get terminated; the rest of the signaling happens as usual to get the VIP from Contrail Controllers to the Datacenter GW via iBGP/eBGP and propagate it further into the backbone of the ISP)

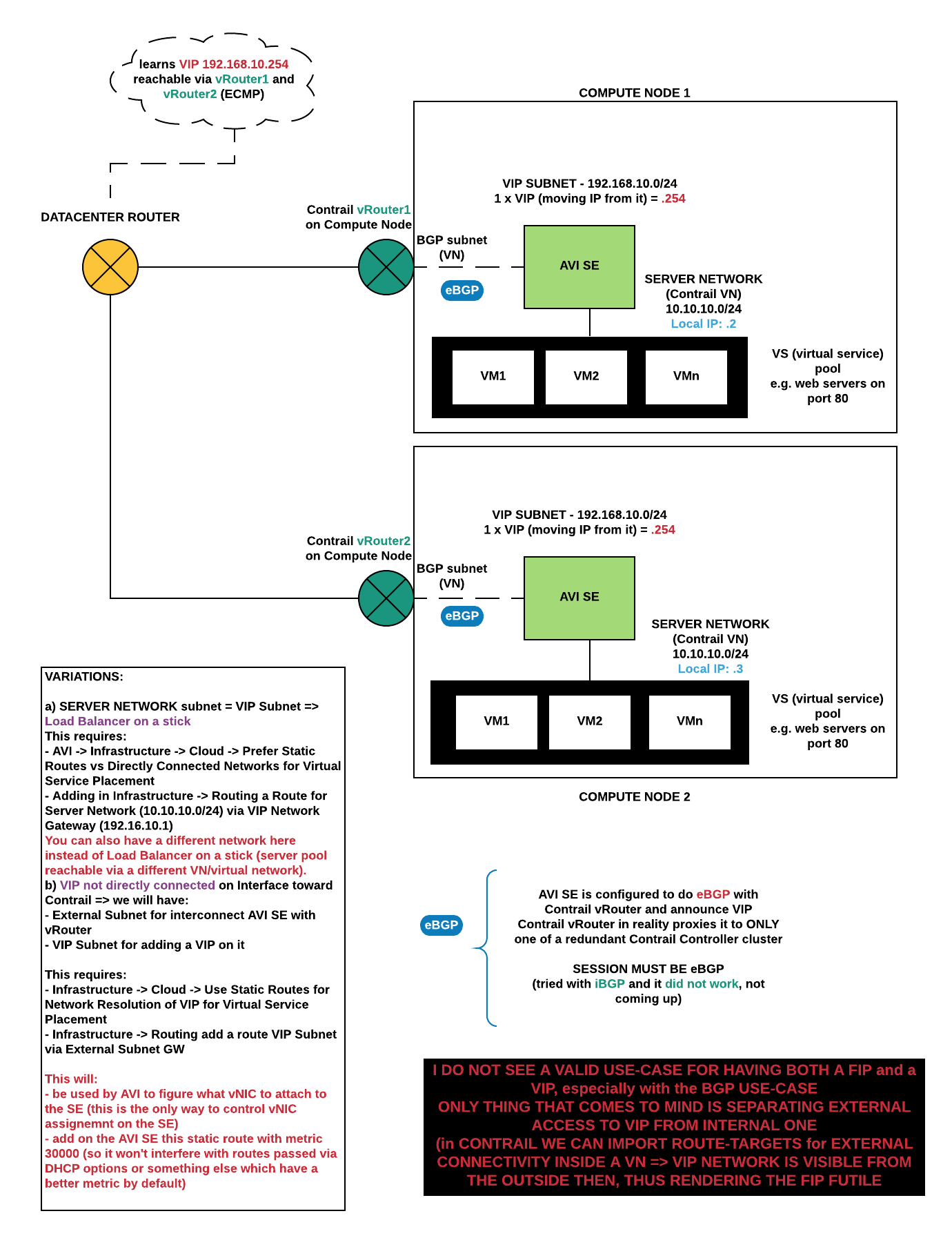

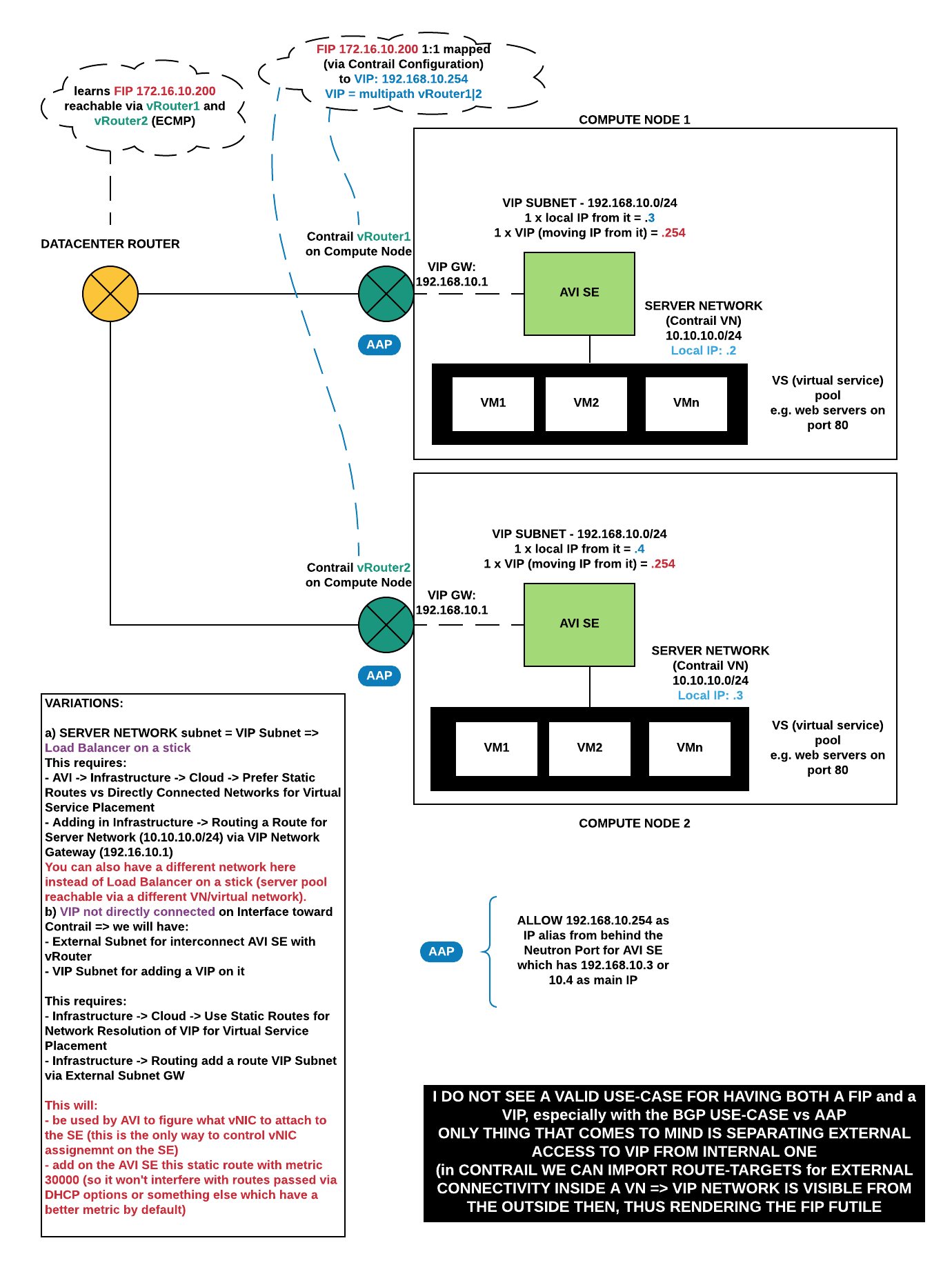

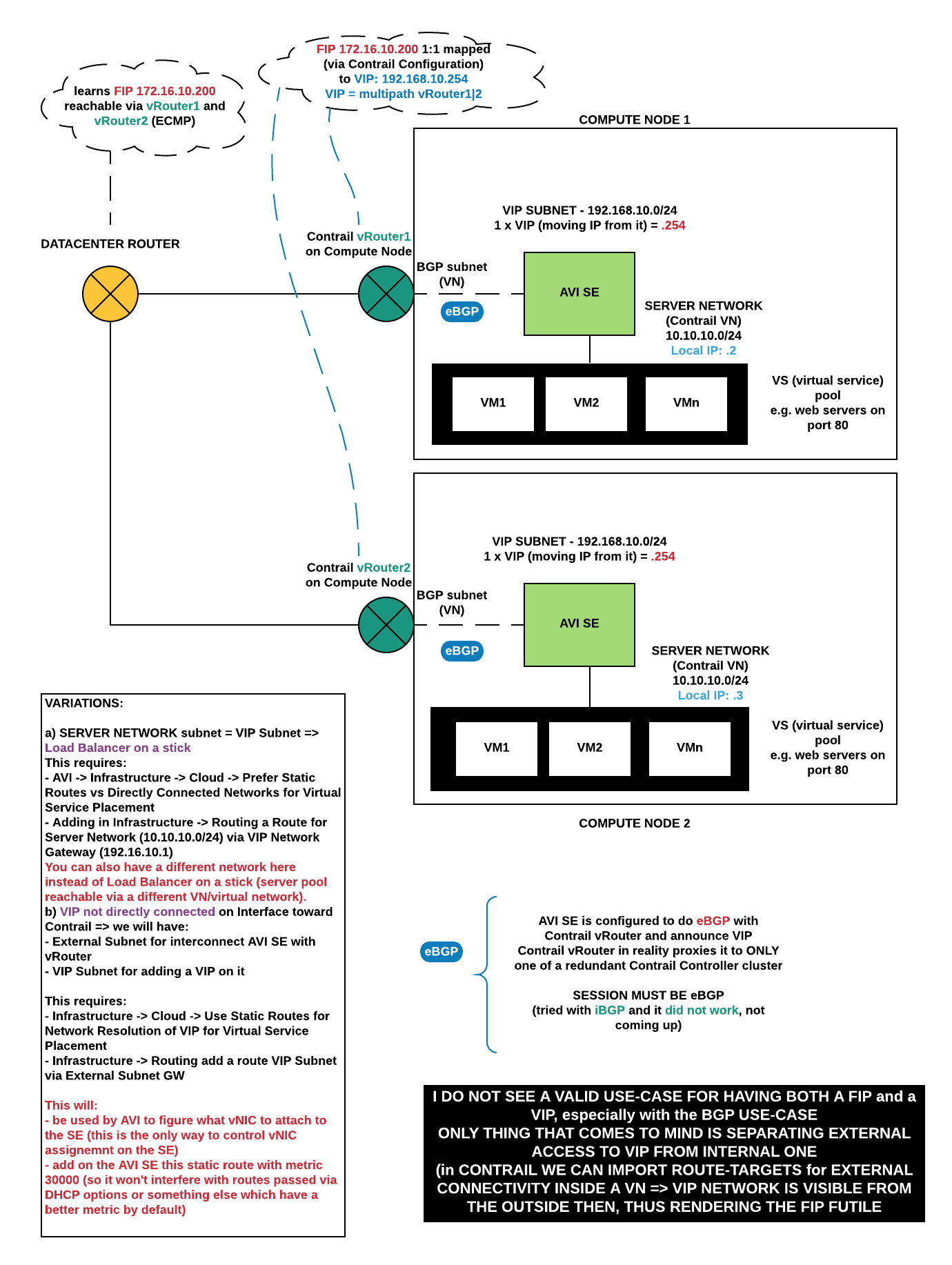

Four pictures to illustrate both principles:

VIP with Allowed Address Pairs

Ending up with:

- vNIC in VIP Subnet (external)

- vNIC in Pool Member Subnet

VIP with BGP

FIP with VIP and Allowed Address Pairs

FIP with VIP and BGP

AVI LB with AAP does not do auto scaling / ECMP by default. One needs to go into the AVI CLI and do:

    ssh admin@<AVI-CONTROLLER-IP>
    shell --tenant <TENANT where you have your SEs>
    configure virtualservice <YOUR-VIRTUAL-SERVICE-NAME>
    scaleout_ecmp
    save
    #this only modifies the general settings, now we need to trigger it to happen to the existing setup
    scaleout virtualservice <YOUR-VIRTUAL-SERVICE-NAME> vip_id <ID-VIP, normally 0 or 1>
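If this has to be repeated for several virtual services, the command sequence above can be templated. The sketch below only fills the placeholders into the same lines; the function name and the sample tenant and VS names are hypothetical, and it assumes the tenant option is spelled `--tenant` (the dash in the original text was typographically altered).

```python
def scaleout_commands(tenant, vs_name, vip_id=0):
    """Return the AVI shell lines from this article with placeholders filled in.

    The lines are meant to be typed (or pasted) after logging in to the
    Controller via SSH; nothing is executed here.
    """
    return [
        f"shell --tenant {tenant}",
        f"configure virtualservice {vs_name}",
        "scaleout_ecmp",
        "save",
        # The first block only changes the VS settings; this line triggers
        # the scaleout for the already existing setup.
        f"scaleout virtualservice {vs_name} vip_id {vip_id}",
    ]


if __name__ == "__main__":
    # "customer-a" and "web-vs" are made-up names.
    for line in scaleout_commands("customer-a", "web-vs", vip_id=0):
        print(line)
```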

Configuration

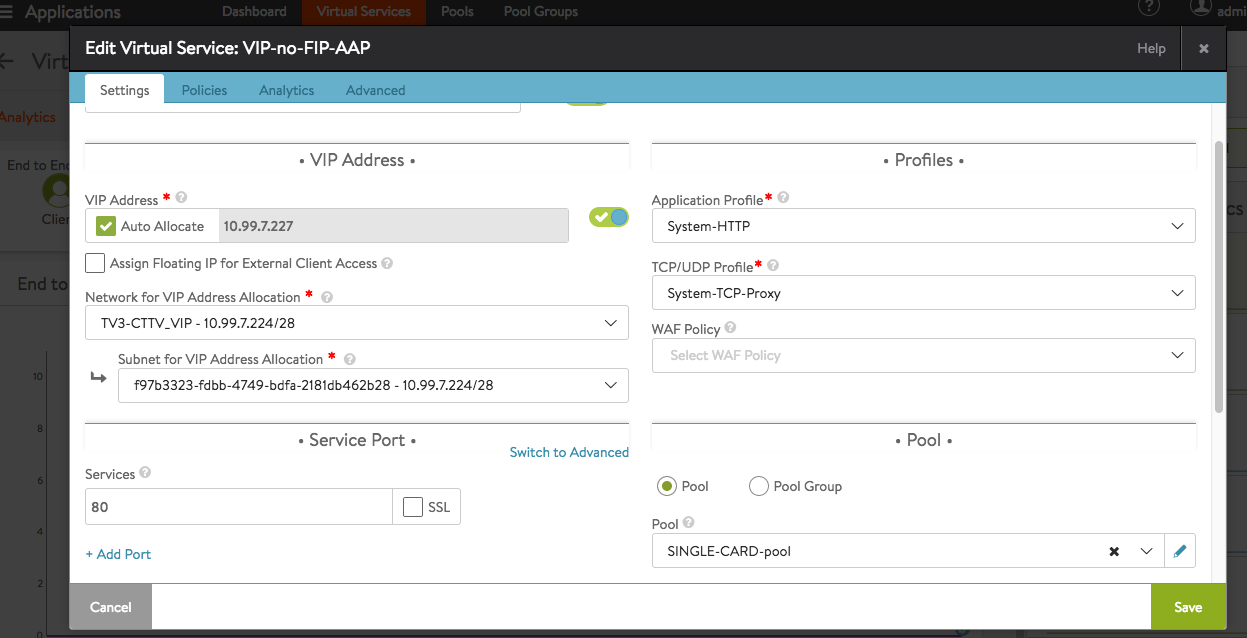

VIP with AAP

This setup is pretty straightforward:

- you need to choose a VIP from a Contrail VN pool (AVI will automatically list all Networks it discovers)

- you create a Server Pool with your Pool Members

- optionally: you specify Network Placement option (in this case auto also works and decides what vNIC in what network the AVI SE should have); to have pool members reachable via another VN (virtual network) you need to enable in Infrastructure -> Cloud the option to use Static Routes for vNIC Placement and add under Routing a corresponding entry (your pool members subnet via GW from another VN).

VIP with BGP

Ending up with:

- vNIC in BGP subnet (external)

- vNIC in Pool Member Subnet

- VIP (from VIP subnet / Contrail VN) announced via BGP

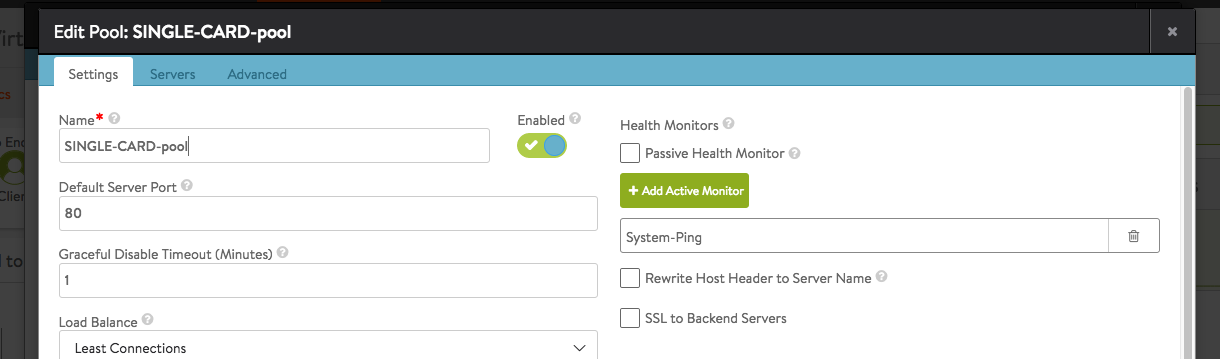

AVI Side

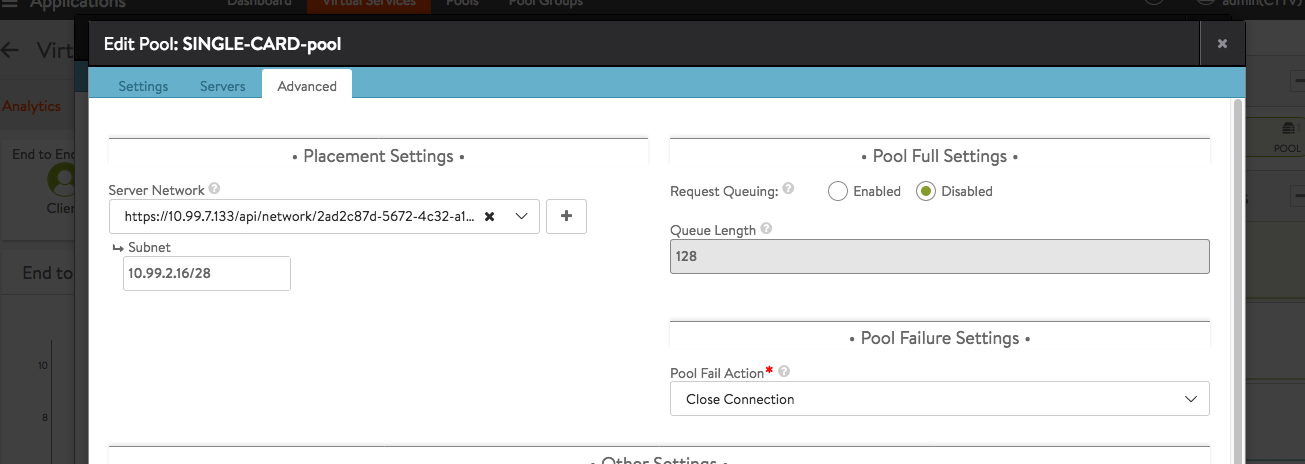

Same setup as before with the only difference being: Settings -> Infrastructure -> Routing, setting up BGP peering

If you see the BGP session not coming up, check if it works without a password. The Web Interface in some versions generates the password for the Quagga configuration wrong (the AVI Shell/CLI though does not have this issue).

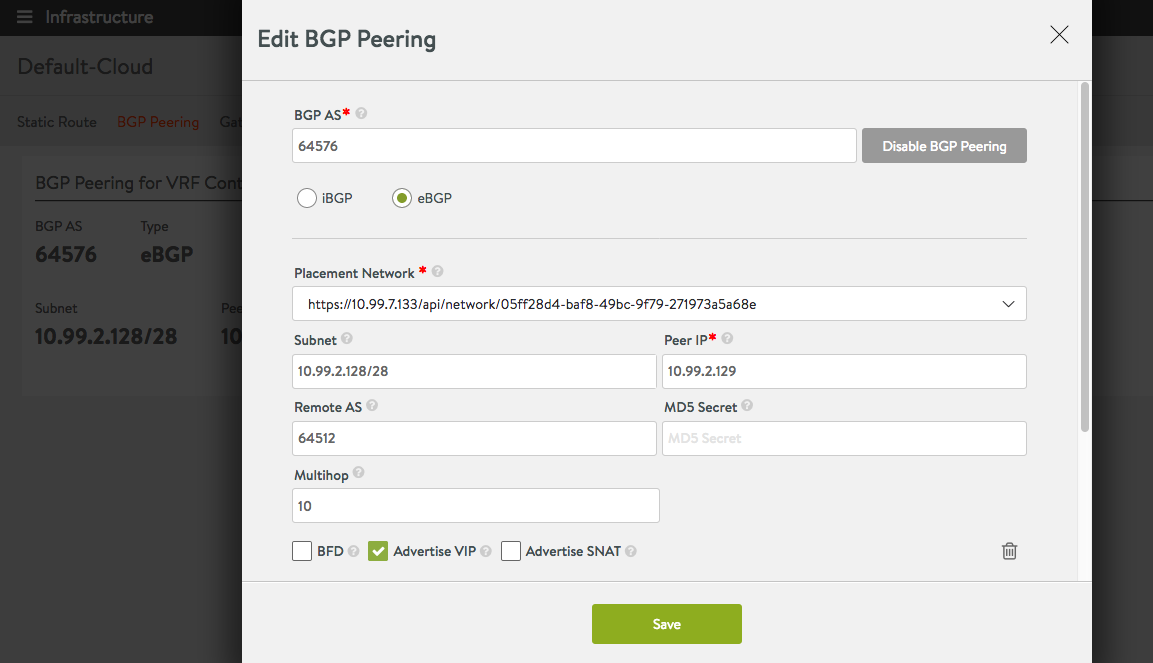

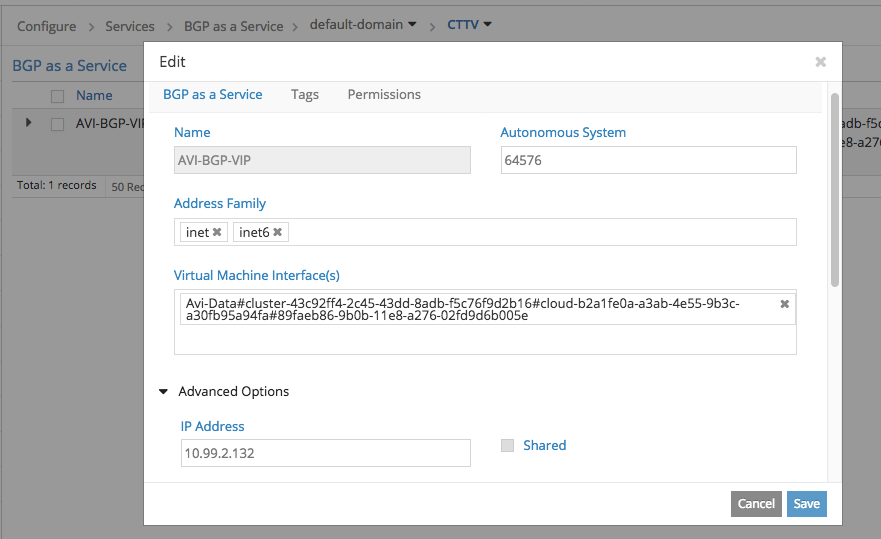

Contrail Side

In Advanced Options I set which IP it should have as BGP Session Destination. This is because AVI will have a local Subnet IP and also the VIP from behind the same Neutron Port (vNIC) assigned to the AVI SE.

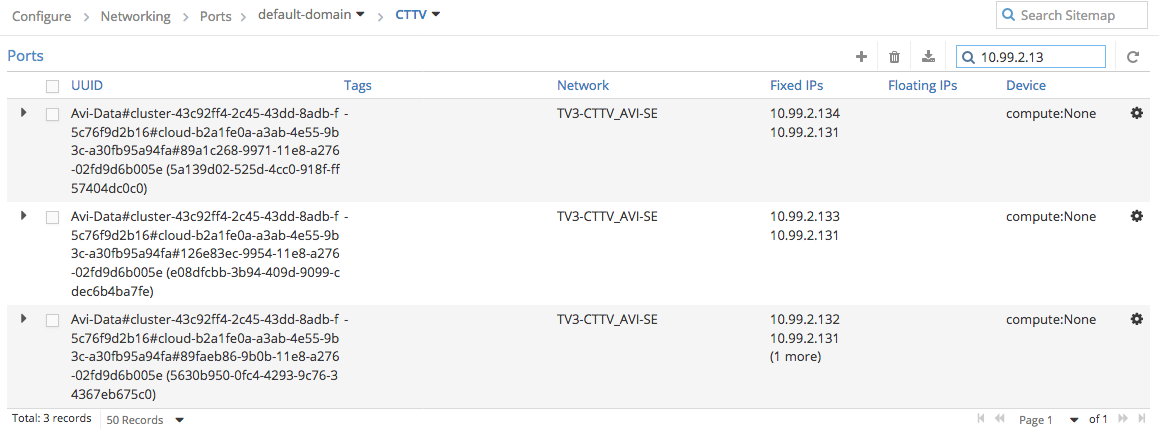

Contrail requires that the Virtual Machine Interface on which to listen for BGP Sessions be specified (what you see there with Avi-Data#… is in fact the Contrail vRouter facing vNIC of an AVI SE). AVI currently does not call the Contrail API to add a new VMI when it scales out and spawns a new SE => this step remains a bit manual. This might change, as a Feature Request has been raised with AVI.

VIP with FIP and AAP

Ending up with:

- vNIC in VIP Subnet (external)

- vNIC in Pool Member Subnet

- FIP mapped from Contrail to the VIP, VIP is multipath (number of SEs) => ECMP

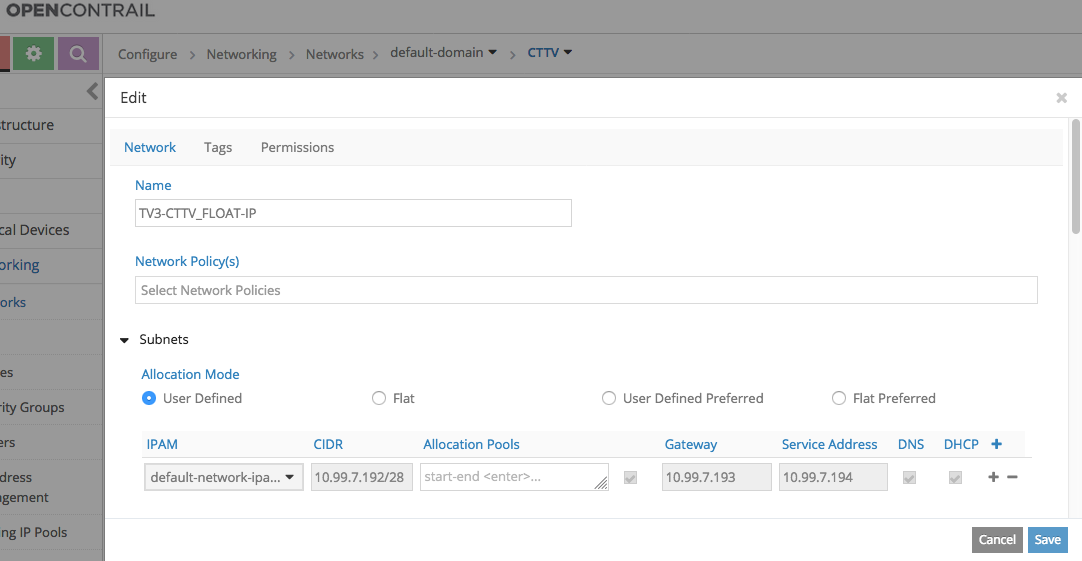

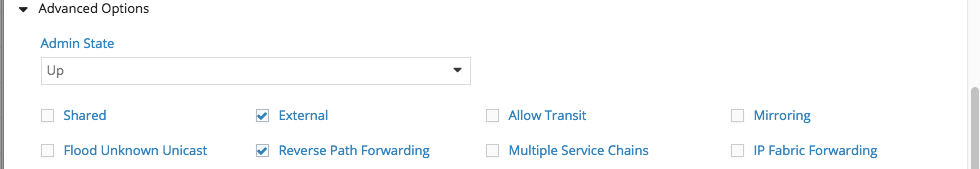

Contrail side

We need to create a Virtual Network (VN) for Floating IPs or reuse an existing one. We also need to create a Floating IP Pool = a pool of IPs out of the VN which are dedicated to this purpose.

The Floating IP Virtual Network has to be defined as External, otherwise it will not be selectable from AVI for FIP Assignment.

AVI side

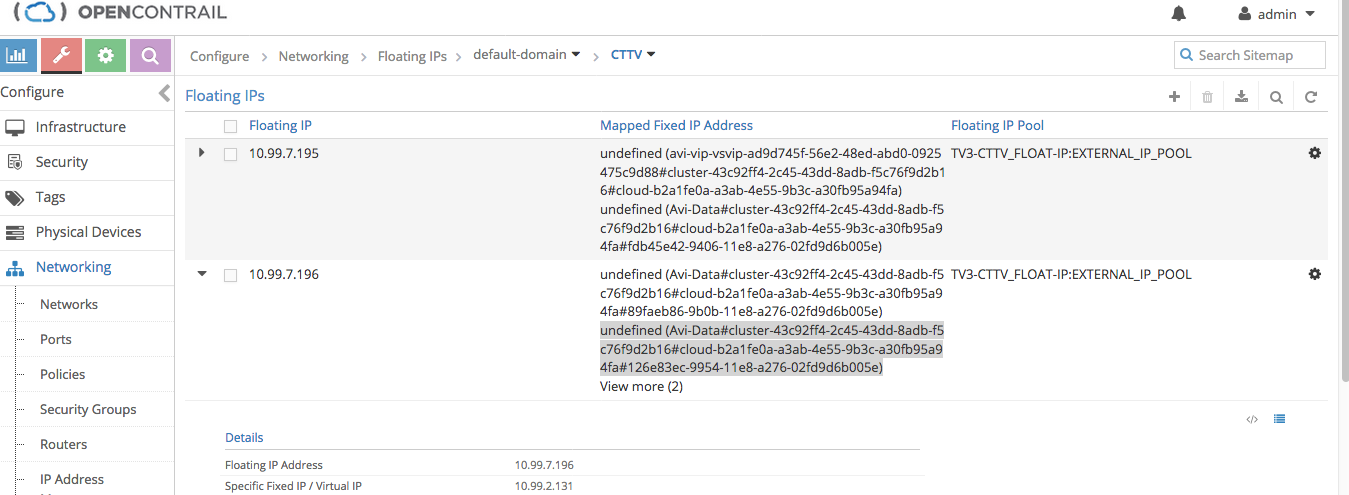

Data:

- VIP: 10.99.2.131

- FIP: 10.99.7.196

- Server Pool: VN with 10.99.2.16/28 subnet and in it a server with IP: 10.99.2.21
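A quick sanity check of this address plan, using Python's standard `ipaddress` module. It only restates the data above: the pool member sits inside the /28, while the VIP and the FIP come from other networks.

```python
import ipaddress

# Values taken from the "Data" list above.
POOL_SUBNET = ipaddress.ip_network("10.99.2.16/28")
POOL_MEMBER = ipaddress.ip_address("10.99.2.21")
VIP = ipaddress.ip_address("10.99.2.131")
FIP = ipaddress.ip_address("10.99.7.196")


def describe(name, address, subnet):
    """Return a one-line statement about whether address is inside subnet."""
    relation = "inside" if address in subnet else "outside"
    return f"{name} {address} is {relation} {subnet}"


if __name__ == "__main__":
    for name, address in (("pool member", POOL_MEMBER), ("VIP", VIP), ("FIP", FIP)):
        print(describe(name, address, POOL_SUBNET))
```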

VIP with FIP and BGP

Ending up with:

- vNIC in BGP Subnet (external)

- vNIC in Pool Member Subnet

- VIP (VIP Subnet / Contrail VN) announced via BGP

- FIP mapped in Contrail to VIP, VIP is announced from multiple SEs => ECMP

AVI Side

Same setup as before with the only difference being: Settings -> Infrastructure -> Routing, setting up BGP peering

Contrail Side

In Advanced Options I set which IP it should have as BGP Session Destination. This is because AVI will have a local Subnet IP and also the VIP from behind the same Neutron Port (vNIC) assigned to the AVI SE.

Contrail requires that the Virtual Machine Interface on which to listen for BGP Sessions be specified (what you see there with Avi-Data#… is in fact the Contrail vRouter facing vNIC of an AVI SE). AVI currently does not call the Contrail API to add a new VMI when it scales out and spawns a new SE => this step remains a bit manual. This might change, as a Feature Request has been raised with AVI.

I do not see a good USE CASE for having both a VIP and a FIP with the VIP announced via BGP. It might be useful if the VIP network is only local (imports no routes from outside/external/backbone) and then we announce the VIP for East-West traffic and keep the FIP for North-South.

Extras

VIP not going away with AAP if pool members DOWN

This is as per design for the time being but might change in the future. The only thing one can enable is a feature that stops listening on the VS port when all pool members are down. This effectively just means that any connection to the VS gets a TCP Reset instead of being accepted. That is not very helpful in most setups, as the VIP still attracts traffic and creates a blackhole. One can mitigate this by using GSLB and having redundancy/georedundancy via DNS based methods, but this is a bit old-school. Most companies are moving toward anycast BGP or a mix of DNS and anycast BGP (where several geographical areas worldwide are concerned). This is why I find the BGP approach the preferable one, as then the VIP advertisement DOES go away.

FIP still mapped to VIP even if all pool members are down

Same as the topic before BUT with the mention that the mapping done by AVI in Contrail is never altered once set. This means that once the FIP has been mapped to the VIP it remains like this regardless of pool member health.

Integrating with Contrail for BGP requires eBGP

When configuring BGPaaS with Contrail and trying to make an iBGP session with AVI I noticed it was not coming up and the debug on AVI side was showing that it gets rejected (not at TCP level but rather inside a BGP message).

Conclusion: USE eBGP.
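As a reminder of what that means in practice: a BGP session is iBGP when both sides use the same AS number and eBGP when they differ, so the SE and Contrail must be configured with different ASNs. A small sketch of that pre-check; the function names and the AS numbers (taken from the private range) are hypothetical.

```python
def session_type(local_as, peer_as):
    """Classify a BGP session by comparing the two AS numbers."""
    return "iBGP" if local_as == peer_as else "eBGP"


def expected_to_come_up(local_as, peer_as):
    """Apply the observation from this article: only eBGP worked with BGPaaS."""
    return session_type(local_as, peer_as) == "eBGP"


if __name__ == "__main__":
    contrail_as = 64512  # hypothetical AS on the Contrail side
    for avi_as in (64512, 65001):  # hypothetical AS values on the AVI side
        print(avi_as, session_type(avi_as, contrail_as),
              expected_to_come_up(avi_as, contrail_as))
```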

Static Routes / Network Placement

AVI has a different way of looking at things. In F5 one would simply define which networks a virtualized F5 Big-IP should have (which NICs/vNICs). In AVI by default both VIP and Pool Members are going to create SEs with vNICs in those Networks (one vNIC in VIP Network, one vNIC in each Pool Member Network). If you want this to happen differently then you have the following two options:

- VIP in another Network than the one of the “external” vNIC of the SE

- Pool Members reachable via another Network, with the latter configured as a vNIC on the SE

VIP in another Network

Two options required:

- AVI -> Infrastructure -> Cloud -> set “Use Static Routes for Network Resolution of VIP for Virtual Service Placement”

- AVI -> Infrastructure -> Routing -> Static Routes -> Add here a Route like: “VIP Network reachable via VN1 GW” (VN1 = virtual network 1)

What the second line causes:

- on the SE a static route with metric 30000 will be installed (this high metric ensures it will not interfere with anything)

- on the SE a vNIC will be created in VN1 and the VIP will appear as AAP there

Pool members reachable via another network

Two options required:

- AVI -> Infrastructure -> Cloud -> set “Prefer Static Routes vs Directly Connected Network for Virtual Service Placement”

- AVI -> Infrastructure -> Routing -> Static Routes -> Add here a Route like: “Pool Member Network reachable via VN1 GW” (VN1 = virtual network 1)

What the second line causes:

- on the SE a static route with metric 30000 will be installed (this high metric ensures it will not interfere with anything)

- on the SE a vNIC will be created in VN1 and your pool members will be reachable via it
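Why metric 30000 does not interfere: in a Linux-style route lookup the longest matching prefix wins, and among routes for the same prefix the lowest metric wins. The sketch below models that selection under simplified assumptions; the route list and the gateway 10.99.0.1 are made up, and this is not AVI's own code.

```python
import ipaddress


def best_route(routes, destination):
    """Pick a route: longest prefix first, then lowest metric.

    Each route is a dict with "prefix", "via" and "metric" keys.
    Returns None when no route matches.
    """
    dest = ipaddress.ip_address(destination)
    candidates = [r for r in routes
                  if dest in ipaddress.ip_network(r["prefix"])]
    if not candidates:
        return None
    return min(candidates,
               key=lambda r: (-ipaddress.ip_network(r["prefix"]).prefixlen,
                              r["metric"]))


if __name__ == "__main__":
    routes = [
        {"prefix": "0.0.0.0/0", "via": "10.99.0.3", "metric": 0},
        {"prefix": "10.99.2.16/28", "via": "connected", "metric": 0},
        # Static route as installed by AVI, gateway is hypothetical.
        {"prefix": "10.99.2.16/28", "via": "10.99.0.1", "metric": 30000},
    ]
    print(best_route(routes, "10.99.2.21"))
    # Without the connected route, the static one is still preferred
    # over the default route because its prefix is longer.
    print(best_route([routes[0], routes[2]], "10.99.2.21"))
```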

Troubleshooting

Dumping Traffic on AVI

This has to be done on the main OS and not inside one of the Linux Namespaces. Inside the Linux Namespace of an AVI VS one has interfaces like:

- avi_eth1

- avi_eth2

- …

Here you will see nothing.

    root@avi_se_after_doing_sudo_from_admin# ip netns exec avi_ns1 bash
    # ip ro
    default via 10.99.0.3 dev avi_eth1
    10.99.0.0/28 dev avi_eth1 proto kernel scope link src 10.99.0.13
    10.99.0.32/28 dev avi_eth2 proto kernel scope link src 10.99.0.40
    10.99.0.48/28 dev avi_eth3 proto kernel scope link src 10.99.0.55
    169.254.0.0/16 via 10.99.0.34 dev avi_eth2

tcpdump on an avi_ethX will not show anything, so exit to the main OS and then choose the “same” interface:

tcpdump -n -i eth1
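The mapping used here is simply "drop the avi_ prefix". A small helper that derives the host-side interface and builds the tcpdump command line could look like this; it assumes the avi_ethX <-> ethX naming holds for every interface, which is inferred from the single example above, and the function names are made up.

```python
def host_interface(ns_interface):
    """Map a namespace interface such as avi_eth1 to the host name eth1."""
    prefix = "avi_"
    if not ns_interface.startswith(prefix):
        raise ValueError(f"unexpected interface name: {ns_interface}")
    return ns_interface[len(prefix):]


def tcpdump_command(ns_interface, capture_filter=""):
    """Build the tcpdump argument list to run in the main OS of the SE."""
    command = ["tcpdump", "-n", "-i", host_interface(ns_interface)]
    if capture_filter:
        command.extend(capture_filter.split())
    return command


if __name__ == "__main__":
    print(" ".join(tcpdump_command("avi_eth1")))
    print(" ".join(tcpdump_command("avi_eth2", "host 10.99.0.40")))
```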

BGP not coming up on AVI

SSH into the SE as admin, then do “sudo”. Go into the corresponding namespace (do an “ip netns” first to see which ones exist).

    root@Avi-se-gqnta:~# ip netns exec avi_ns1 bash
    root@Avi-se-gqnta:~# nc localhost bgpd
    “password by default is avi123”
    Avi-se-gqnta> en
    sh run
    Current configuration:
    !
    password avi123
    log file /var/lib/avi/log/bgp/avi_ns1_bgpd.log

So the log file is the one shown above. Next we activate debug for BGP:

    Avi-se-gqnta# debug bgp
    debug bgp
    BGP debugging is on

and then follow the log file:

    Avi-se-gqnta# exit
    exit
    tail -f /var/lib/avi/log/bgp/avi_ns1_bgpd.log

Sometimes the SE does not get provisioned with the proper vNICs after a change in the VS

If you make a change to a VS, like changing the Member Pool for example, you might notice that the vNICs of the SE that hosts it do not get adjusted. I am not sure if this is a glitch but during lab testing I discovered two very basic and primitive ways to force a refresh:

- in AVI, under Application -> Service Engines -> click on Disable, then on Enable for your VS

- Reboot from Openstack the SE concerned

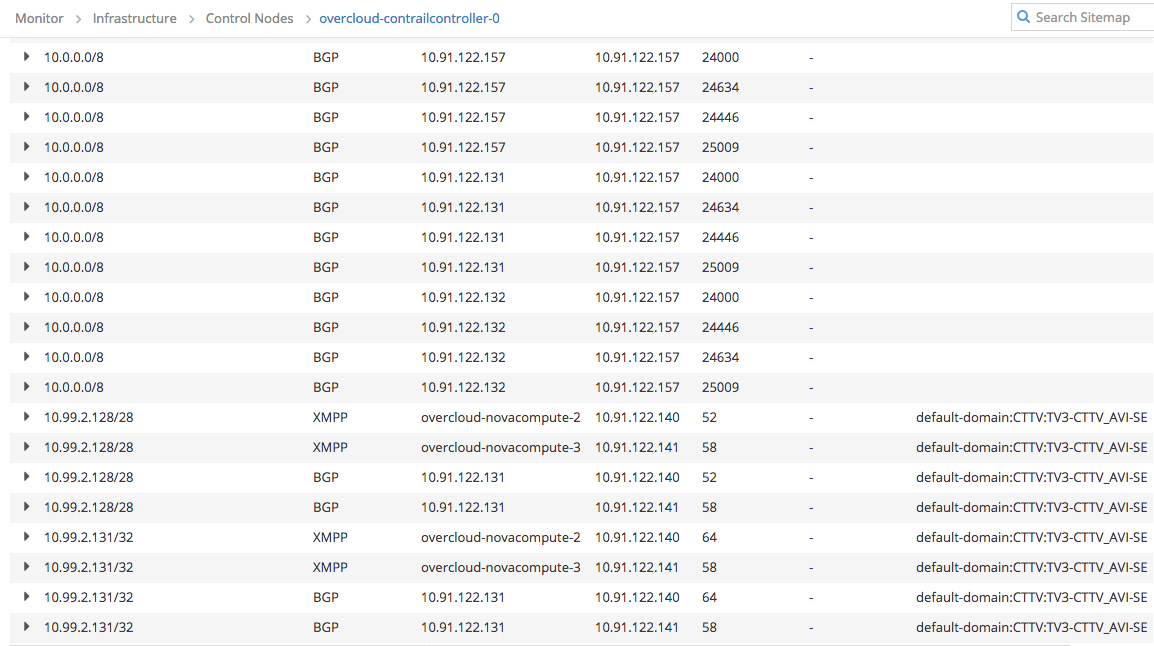

Following the Control Plane and Forwarding plane in Contrail

Control Plane for FIP

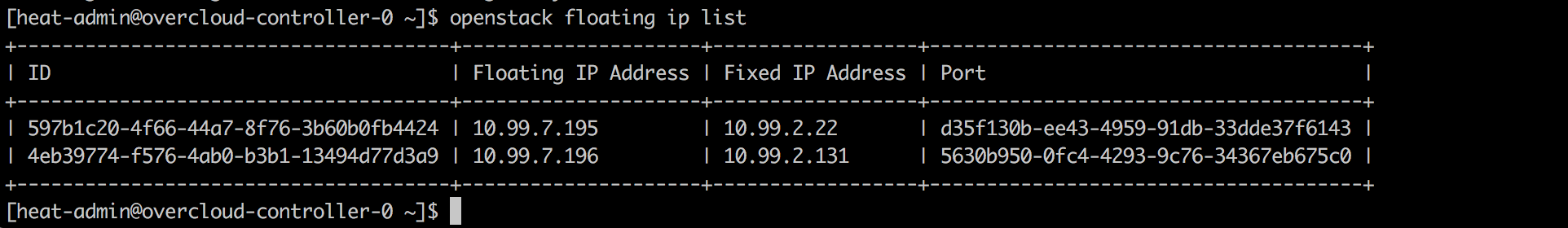

AVI mapped the FIP 10.99.7.196 to VIP 10.99.2.131. We look at how the Contrail Controllers learned the FIP.

Contrail -> Monitor -> Control Nodes -> pick one (if having an HA setup). Click on Routes, then on Expand. Select the Routing Instance (in fact the Virtual Network) for the FIP Network. In Prefix put 10.99.7.196/32 and click on Search. The end of the result shows this:

which effectively says that 10.99.7.196 = FIP is reachable via 2 x Compute Nodes, 10.91.122.140 and 10.91.122.141, using MPLS labels 58 and 65.

Control Plane for VIP

The same operation inside the VIP Network reveals:

which effectively says that 10.99.2.131 = VIP is reachable via 2 x Compute Nodes, 10.91.122.140 and 10.91.122.141, using MPLS labels 58 and 64.

Data Plane for FIP

So we know that FIP is reachable via MPLS label 58 for Compute-3 and 65 for Compute-2. We ssh into Compute-3 (Compute-2 will follow the same principle so I am not putting it here anymore).

    [heat-admin@overcloud-novacompute-3 ~]$ sudo su -
    [root@overcloud-novacompute-3 ~]# mpls --get 58
    MPLS Input Label Map

       Label    Nexthop
    -------------------
          58         83

    [root@overcloud-novacompute-3 ~]# nh --get 83
    Id:83 Type:Encap Fmly: AF_INET Rid:0 Ref_cnt:15 Vrf:7   <-- rt --dump 7 would display routes in this VRF
          Flags:Valid, Policy, Etree Root,
          EncapFmly:0806 Oif:9 Len:14
          Encap Data: 02 56 30 b9 50 0f 00 00 5e 00 01 00 08 00

    [root@overcloud-novacompute-3 ~]# vif --get 9
    Vrouter Interface Table

    Flags: P=Policy, X=Cross Connect, S=Service Chain, Mr=Receive Mirror
           Mt=Transmit Mirror, Tc=Transmit Checksum Offload, L3=Layer 3, L2=Layer 2
           D=DHCP, Vp=Vhost Physical, Pr=Promiscuous, Vnt=Native Vlan Tagged
           Mnp=No MAC Proxy, Dpdk=DPDK PMD Interface, Rfl=Receive Filtering Offload, Mon=Interface is Monitored
           Uuf=Unknown Unicast Flood, Vof=VLAN insert/strip offload, Df=Drop New Flows, L=MAC Learning Enabled
           Proxy=MAC Requests Proxied Always, Er=Etree Root, Mn=Mirror without Vlan Tag

    vif0/9  OS: tap5630b950-0f
            Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:10.99.2.132   <-- AVI SE local IP facing GW
            Vrf:7 Mcast Vrf:7 Flags:PL3L2DEr QOS:-1 Ref:5
            RX packets:3645649 bytes:575731728 errors:0
            TX packets:4576199 bytes:614799104 errors:0
            ISID: 0 Bmac: 02:56:30:b9:50:0f
            Drops:135
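The Encap Data of next hop 83 is 14 bytes long (Len:14), which is the size of an Ethernet II header. Reading it that way, as destination MAC, source MAC and EtherType, ties the next hop to the vif output: the destination MAC equals the Bmac of vif0/9 and the source MAC equals its HWaddr. The sketch below does that decoding; the function name is illustrative.

```python
def parse_encap_data(encap_hex):
    """Decode 14 bytes of vRouter Encap Data as an Ethernet II header."""
    raw = bytes.fromhex(encap_hex.replace(" ", ""))
    if len(raw) != 14:
        raise ValueError("expected 14 bytes of encap data")

    def mac(chunk):
        return ":".join(f"{byte:02x}" for byte in chunk)

    return {
        "dst_mac": mac(raw[0:6]),    # MAC of the SE vNIC behind the tap
        "src_mac": mac(raw[6:12]),   # MAC used by the vRouter
        "ethertype": f"0x{int.from_bytes(raw[12:14], 'big'):04x}",
    }


if __name__ == "__main__":
    header = parse_encap_data("02 56 30 b9 50 0f 00 00 5e 00 01 00 08 00")
    print(header)
    # dst_mac 02:56:30:b9:50:0f, src_mac 00:00:5e:00:01:00, ethertype 0x0800 (IPv4)
```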

Data Plane for VIP

Same as for FIP (one of the MPLS labels is 58). The other one is different so we take it as example. MPLS label 64 on Compute-2.

    [root@overcloud-novacompute-3 ~]# mpls --get 64
    MPLS Input Label Map

       Label    Nexthop
    -------------------
          64         76

    [root@overcloud-novacompute-2 ~]# nh --get 76
    Id:76 Type:Composite Fmly: AF_INET Rid:0 Ref_cnt:2 Vrf:6
          Flags:Valid, Policy, Ecmp, Etree Root,
          Valid Hash Key Parameters: Proto,SrcIP,SrcPort,DstIp,DstPort
          Sub NH(label): 83(52) 89(60) -1

Composite here means that there are 2 x SEs hosting the VS on this same Compute:

    Id:83 Type:Encap Fmly: AF_INET Rid:0 Ref_cnt:14 Vrf:6
          Flags:Valid, Etree Root,
          EncapFmly:0806 Oif:8 Len:14
          Encap Data: 02 e0 8d fc bb 3b 00 00 5e 00 01 00 08 00

    Id:89 Type:Encap Fmly: AF_INET Rid:0 Ref_cnt:9 Vrf:6
          Flags:Valid, Etree Root,
          EncapFmly:0806 Oif:10 Len:14
          Encap Data: 02 5a 13 9d 02 52 00 00 5e 00 01 00 08 00

    [root@overcloud-novacompute-2 ~]# vif --get 8
    Vrouter Interface Table

    Flags: P=Policy, X=Cross Connect, S=Service Chain, Mr=Receive Mirror
           Mt=Transmit Mirror, Tc=Transmit Checksum Offload, L3=Layer 3, L2=Layer 2
           D=DHCP, Vp=Vhost Physical, Pr=Promiscuous, Vnt=Native Vlan Tagged
           Mnp=No MAC Proxy, Dpdk=DPDK PMD Interface, Rfl=Receive Filtering Offload, Mon=Interface is Monitored
           Uuf=Unknown Unicast Flood, Vof=VLAN insert/strip offload, Df=Drop New Flows, L=MAC Learning Enabled
           Proxy=MAC Requests Proxied Always, Er=Etree Root, Mn=Mirror without Vlan Tag

    vif0/8  OS: tape08dfcbb-3b
            Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:10.99.2.133   <-- SE1 on Compute2 local IP
            Vrf:6 Mcast Vrf:6 Flags:PL3L2DEr QOS:-1 Ref:5
            RX packets:11544536 bytes:1814507024 errors:0
            TX packets:12878176 bytes:1874633638 errors:0
            ISID: 0 Bmac: 02:e0:8d:fc:bb:3b
            Drops:227

    [root@overcloud-novacompute-2 ~]# vif --get 10
    Vrouter Interface Table

    Flags: P=Policy, X=Cross Connect, S=Service Chain, Mr=Receive Mirror
           Mt=Transmit Mirror, Tc=Transmit Checksum Offload, L3=Layer 3, L2=Layer 2
           D=DHCP, Vp=Vhost Physical, Pr=Promiscuous, Vnt=Native Vlan Tagged
           Mnp=No MAC Proxy, Dpdk=DPDK PMD Interface, Rfl=Receive Filtering Offload, Mon=Interface is Monitored
           Uuf=Unknown Unicast Flood, Vof=VLAN insert/strip offload, Df=Drop New Flows, L=MAC Learning Enabled
           Proxy=MAC Requests Proxied Always, Er=Etree Root, Mn=Mirror without Vlan Tag

    vif0/10 OS: tap5a139d02-52
            Type:Virtual HWaddr:00:00:5e:00:01:00 IPaddr:10.99.2.134   <-- SE2 on Compute2 local IP
            Vrf:6 Mcast Vrf:6 Flags:PL3L2DEr QOS:-1 Ref:5
            RX packets:5947976 bytes:903242631 errors:0
            TX packets:7227771 bytes:961166250 errors:0
            ISID: 0 Bmac: 02:5a:13:9d:02:52
            Drops:6
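The composite next hop 76 lists its hash key parameters (Proto, SrcIP, SrcPort, DstIp, DstPort) and two sub next hops, 83 and 89. The principle is that every packet of one flow hashes to the same sub next hop, while different flows spread across both SEs. The sketch below illustrates that principle with SHA-256 from the standard library; it is not the hash function the vRouter actually uses, and the client address is a made-up documentation address.

```python
import hashlib


def pick_sub_nexthop(sub_nexthops, proto, src_ip, src_port, dst_ip, dst_port):
    """Choose one sub next hop for a flow based on its 5-tuple.

    Illustration only: the real vRouter uses its own hash function.
    """
    key = f"{proto}|{src_ip}|{src_port}|{dst_ip}|{dst_port}".encode()
    digest = hashlib.sha256(key).digest()
    index = int.from_bytes(digest[:4], "big") % len(sub_nexthops)
    return sub_nexthops[index]


if __name__ == "__main__":
    sub_nexthops = [83, 89]  # from "Sub NH(label): 83(52) 89(60)"
    vip = "10.99.2.131"
    for src_port in range(40000, 40005):
        # 192.0.2.10 is a hypothetical client; protocol 6 = TCP.
        chosen = pick_sub_nexthop(sub_nexthops, 6, "192.0.2.10", src_port, vip, 80)
        print(src_port, "->", chosen)
```

Because the source port is part of the key, a single client opening several connections can land on different SEs, while each individual connection stays on one SE.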

Confirming it via Contrail -> Configure -> Network -> Ports

Orchestrating AVI

Three ways:

- Neutron LBaaS => needs installing plugin and then provisioning / spawning instances can be done via HEAT and using Neutron LBaaS semantics

- Contrail LBaaS => same, needs installing a plugin into Contrail

- AVI Native API (this provides the most features/flexibility): [API Guide](https://avinetworks.com/docs/latest/api-guide/) or [AVI Heat Templates](https://avinetworks.com/docs/18.1/avi-heat-resources/)